Hands-Free List Navigation

To fulfill requests, Bixby will often ask a user to make a selection from a list of options. If the user asks to make dinner reservations at a good Italian restaurant, Bixby might present several restaurants to select from; to make the reservation, Bixby will also need the user to choose the party size and the reservation date and time. Capsules can get selections from users by presenting views with lists on the screen of their phone or other device. In hands-on mode, users can interact with those views by tapping on their selections.

But interacting with views through touch isn't always desired, or even possible. A user might be talking with Bixby while driving, or might always need to interact with their device in a hands-free or eyes-free mode for accessibility reasons. The user might simply prefer hands-free mode, or might prefer to activate Bixby with the Bixby button but then speak to it instead of tapping items on a screen. And Bixby might be running on a device that doesn't have a visual interface. So Bixby needs a way to let users navigate through and select items from lists entirely by voice. We call this Hands-Free List Navigation.

For more information on design considerations when developing for hands-free versus hands-on mode, including when to use the various navigation modes, see the Hands-Free and Multiple Devices Design Guide.

Make sure any text you write for Bixby follows the dialog best practices as well as the Writing Dialog Design Guide.

If users are in hands-free mode, they are subject to being locked into the conversation with Bixby for a period of time. For more information, see Capsule Lock and Modality in the Guiding Conversations Developers' Guide.

Navigation Modes

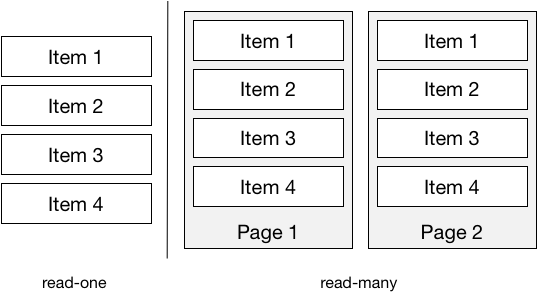

Both result views and input views can have a navigation mode for hands-free list navigation. The navigation mode chosen tells Bixby how to present options in a list. Depending on the mode, Bixby will listen for specific navigation commands.

There is always a list navigation mode, even when one isn't explicitly declared. If you don't include a navigation-mode block, Bixby will use the read-many mode, with a page-size set to the total number of items.

You can test your hands-free navigation in the Simulator. When a user is on a real device, however, hands-free mode is activated by saying the wake phrase "Hi Bixby". (In contrast, hands-on mode is activated implicitly by users pressing and holding the Bixby button on the device. Bixby-enabled watch devices can only be put in hands-free mode when they are paired with Bluetooth headphones.)

read-one

The read-one mode renders each item in the list and reads them aloud one at a time, asking the user if they want that one (an item-selection-confirmation prompt). The user has several navigation commands available.

- An affirmative response will select that item
- A negative or next response will go on to the next item
- A previous response will return to the previous item
- A repeat response will repeat the current item
- A cancel response aborts the list navigation
- An ordinal selection response (such as "first", "second", or "third") can be used to select specific items in the list
- A response that matches or partially matches an item in the list can be used to select specific items in the list, as long as voice content selection is enabled
- A select-none response skips an optional input, leaving its value unset (this option is not available if the input is required)

read-one-and-next

The read-one-and-next mode renders each item in the list and reads them aloud one at a time, asking the user if they wish to go on to the next item after each one with a next-item-question prompt. This is similar to read-one, but changes the meaning of "Yes" and "No":

- A negative or cancel response aborts the list navigation
- An affirmative or next response will go on to the next item
- A previous response will return to the previous item
- A repeat response will repeat the current item
- An ordinal selection response (such as "first", "second", or "third") can be used to select specific items in the list
- A response that matches or partially matches an item in the list can be used to select specific items in the list, as long as voice content selection is enabled
- A select-none response skips an optional input, leaving its value unset (this option is not available if the input is required)

This is useful if the user doesn't need to get more information (like in a details view) on items within the list, but only needs the summaries read, letting them go through results more quickly. This mode is only allowed in a result-view, not an input-view.

read-many

The read-many mode reads each item in the list aloud in pages of more than one at a time, asking the user if they want a selection from that page or would like to go on to the next page (a page-selection-confirmation prompt).

- An affirmative response prompts the user to select one of the items in that page by ordinal value (such as "first" or "second"), and uses that as the selection
- A negative or next response goes to the next page
- A previous response returns to the previous page
- A repeat response repeats the current page
- A cancel response aborts the list navigation
- An ordinal selection response (such as "first", "second", or "third") can be used to select specific items within the page
- A response that matches or partially matches an item in the list can be used to select specific items in the list, as long as voice content selection is enabled
- A select-none response skips an optional input, leaving its value unset (this option is not available if the input is required)

The distinction between read-one and read-many is that read-one presents items to the user one item at a time, asking them after each item whether it's the one they want to choose from the list, while read-many presents items grouped in pages, asking them after each page whether they wish to select any of the items on that page.

read-many-and-next

The read-many-and-next mode reads each item in the list aloud in pages of more than one at a time, asking the user if they want to go on to the next set after each page is read (a next-page-confirmation prompt). This is similar to read-many, but changes the meaning of "Yes" and "No":

- A negative or cancel response aborts the conversation
- An affirmative or next response goes to the next page
- A previous response returns to the previous page
- A repeat response repeats the current page
- An ordinal selection response (such as "first", "second", or "third") can be used to select specific items in the list
- A response that matches or partially matches an item in the list can be used to select specific items in the list, as long as voice content selection is enabled
- A select-none response skips an optional input, leaving its value unset (this option is not available if the input is required)

This is useful if the user doesn't need to get detail views on items within the list, but only needs the summaries read, letting them go through results more quickly. This mode is only allowed in a result-view, not an input-view.
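The four modes described so far differ mainly in what a plain "yes" or "no" does. The Python sketch below collects those meanings in one lookup table. It only summarizes the behavior described in this section and is not part of any Bixby API; the names YES_NO_MEANING and interpret are hypothetical.

```python
# What an affirmative ("yes") or negative ("no") response means in each mode,
# as described in the sections above. Illustrative summary only.
YES_NO_MEANING = {
    "read-one": {
        "affirmative": "select the current item",
        "negative": "go to the next item",
    },
    "read-one-and-next": {
        "affirmative": "go to the next item",
        "negative": "abort",
    },
    "read-many": {
        "affirmative": "ask which item on the page",
        "negative": "go to the next page",
    },
    "read-many-and-next": {
        "affirmative": "go to the next page",
        "negative": "abort",
    },
}


def interpret(mode: str, response: str) -> str:
    """Look up what a yes/no response does in the given navigation mode."""
    return YES_NO_MEANING[mode][response]


print(interpret("read-one", "affirmative"))
print(interpret("read-one-and-next", "affirmative"))
```

Seen side by side, the "-and-next" variants turn "yes" into a paging command, which is why they suit result lists that the user only wants to hear summarized.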

read-manual

The read-manual mode pages through a list of results, similar to read-many, but lets a capsule execute custom goal actions when the user moves forward and backward in a result list. These actions could, for example, use an API's pagination cursor to request specific result sets from a web service.

- An affirmative response prompts the user to select one of the items in that page by ordinal value (such as "first" or "second"), and uses that as the selection
- A negative or next response goes to the next page
- A previous response returns to the previous page
- A repeat response repeats the current page
- A cancel response aborts the list navigation
- An ordinal selection response (such as "first", "second", or "third") can be used to select specific items within the page
- A response that matches or partially matches an item in the list can be used to select specific items in the list, as long as voice content selection is enabled
- A select-none response skips an optional input, leaving its value unset (this option is not available if the input is required)

For more details, read the read-manual documentation. The read-manual mode is allowed in both result-view and input-view.

Bixby's behavior for read-many and read-many-and-next will change when it determines that the last highlight group will have only a single item on it and the defined page-size is larger than 1. In this case, the single item will be added to the next-to-last group, rather than presenting the user with a final one-item group. For example, if the page-size is 5 and there are 11 items total, the first group will be 5 items, and the second group will be 6, "folding in" the single remaining item. If there are 12 items total, there will be three groups of 5, 5, and 2 items.

Because of this behavior, you must not mention or rely on a hard-coded page-size value! In dialog, use the Expression Language pagination functions in responses.
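To make the folding rule concrete, here is a small Python sketch that computes how many items land in each spoken page. It models the behavior described above under the stated rule only; it is not Bixby's implementation, and the function name page_groups is hypothetical.

```python
from typing import List


def page_groups(total_items: int, page_size: int) -> List[int]:
    """Return the number of items in each page, folding a lone last item
    into the previous page when page_size is larger than 1."""
    if total_items < 0 or page_size < 1:
        raise ValueError("total_items must be >= 0 and page_size >= 1")

    full_pages, remainder = divmod(total_items, page_size)
    groups = [page_size] * full_pages

    if remainder == 1 and page_size > 1 and groups:
        # A final one-item page is merged into the next-to-last page.
        groups[-1] += 1
    elif remainder:
        # Any other leftover becomes its own final page.
        groups.append(remainder)
    return groups


print(page_groups(11, 5))  # [5, 6]
print(page_groups(12, 5))  # [5, 5, 2]
```

Since the size of the last page can exceed page-size, dialog that says something like "here are the next 5" can be wrong, which is the reason to rely on the pagination functions rather than a fixed number.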

read-none

The read-none mode does not read any items from its list of options aloud, instead just prompting the user to make a choice. This should be used when the possible choices are implicit or obvious: for instance, when the user must choose a day of the week. You would not want to have Bixby read the name of each day of the week aloud as part of the prompt.

In addition to the selections, the user has a smaller set of implicit options:

- A cancel response aborts the conversation
- A select-none response skips an optional input, leaving its value unset (this option is not available if the input is required)

There is no pre-defined behavior for navigation commands if the user offers an answer that does not match a navigation command (for instance, saying "find an Italian restaurant" in response to "what is your party size"). It's up to the capsule to handle such situations gracefully. Read the information about Prompting the User and how to handle off-topic responses.

Navigation Commands

The responses available to users in the navigation modes are defined in navigation-commands blocks of your navigation-support file. These blocks contain keys such as next, previous, and repeat, with response keys that define vocabulary for those responses. Here's a simple example:

```
navigation-support {
  match: _
  navigation-commands {
    next {
      response (next)
      response (more)
    }
    previous {
      response (previous)
      response (back)
    }
    item-selection-confirmation {
      affirmative {
        response (yes)
      }
      negative {
        response (no)
      }
    }
    ordinal-selection-patterns {
      pattern ("the (first)[v:viv.core.CardinalSelector:1]")
    }
  }
}
```

- The navigation-support block starts with a match pattern. This defines the concepts that these responses will apply to. These are general responses, which can apply to any concept, so we use the wildcard match. But we could provide more specific language for concepts if it was appropriate to the capsule.
- Confirmation commands like item-selection-confirmation or next-page-confirmation have child blocks for affirmative and negative.
- You can define synonyms like next and more by providing more than one response in a command block.
- You can support custom ordinal selection by defining an ordinal-selection-patterns block. This one uses the predefined viv.core.OrdinalSelector primitive concept.

The commands included in the above example are taken from a generalized set included in viv.core, which covers a wide variety of common responses for "next", "previous", "yes", "no," and so on. In practice, you might not have to define any extra navigation commands with navigation-support at all. Consider adding extra commands when users are likely to use them with your capsule's specific domain. For instance, a capsule that books reservations for hotels and restaurants could accept "book it" or "make the reservation" as an affirmative confirmation response. User testing can also give you guidance for navigation-commands to add: if users frequently try to use the same or similar utterances for navigating your capsule that fail, those responses are good candidates for custom navigation responses. For instance, to add extra confirmations for booking a hotel:

```
navigation-support {
  match: Hotel
  navigation-commands {
    item-selection-confirmation {
      affirmative {
        response (book it)
        response (make the reservation)
      }
    }
  }
}
```

Responses defined in navigation-commands must be exact matches to user utterances. That is, to match yes, the user must say "yes", not "I think so, yes, please" or other phrases that contain or merely resemble a defined response.
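The difference between exact matching and "contains" matching can be sketched in a few lines of Python. This is an illustration of the rule stated above, not Bixby's matcher. The response set and the function name is_affirmative are hypothetical, and trimming whitespace and ignoring case before comparing is an assumption made for the example.

```python
# Hypothetical affirmative responses, mirroring the hotel example above.
AFFIRMATIVE_RESPONSES = {"yes", "book it", "make the reservation"}


def is_affirmative(utterance: str) -> bool:
    """Return True only when the whole utterance equals a defined response."""
    # Assumption for this sketch: compare after trimming and lowercasing.
    normalized = utterance.strip().lower()
    return normalized in AFFIRMATIVE_RESPONSES


print(is_affirmative("book it"))                  # True
print(is_affirmative("I think so, yes, please"))  # False
```

A longer utterance that merely contains "yes" is not treated as a match, so any phrasing you expect users to say needs its own response entry.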

The navigation commands listed in navigation-support do not depend on whether the user is in hands-free or eyes-free mode, so make sure they work both when the user is holding down the Bixby button and responding, and when they are responding to paginated navigation.

For example, if the user is on a hotel capsule's details page and the select-button-text for the page says "book it", define the "book it" utterance as an affirmative item of the item-selection-confirmation block, so Bixby can properly process and select the hotel.

Hands-Free List Navigation in Views

The navigation-mode block can be added to either input or result views:

- In a selection-of block of an input view
- In a list-of block of a result view

The navigation-mode block contains a child block for the specified navigation mode (for example, read-many). This block, in turn, defines statements and questions used by Bixby in this conversation moment.

Here's a simple input view, from a capsule that allows users to book space resorts:

```
input-view {
  match: SpaceResort (result)
  message ("Which space resort would you like?")
  render {
    if (size(result) > 1) {
      selection-of (result) {
        select-button-text ("Book")
        where-each (item) {
          macro (space-resort-summary) {
            param (spaceResort) {
              expression (item)
            }
          }
        }
      }
    }
  }
}
```

To add hands-free list navigation to this input view, we might choose the read-one mode, since there won't be more than a few results available after a resort search.

```
input-view {
  match: SpaceResort (result)
  message ("Which space resort would you like?")
  render {
    if (size(result) > 1) {
      selection-of (result) {
        navigation-mode {
          read-one {
            list-summary ("I found #{size(result)} resorts.")
            page-content {
              underflow-statement (This is the first resort.)
              item-selection-question (Do you want to book this resort?)
              overflow-statement (Those are all the resorts that meet your search.)
              overflow-question (What would you like to do?)
            }
          }
        }
        select-button-text ("Book")
        where-each (item) {
          macro (space-resort-summary) {
            param (spaceResort) {
              expression (item)
            }
          }
        }
      }
    }
  }
}
```

The read-one block defines statements and questions Bixby uses to guide the user through voice navigation.

- list-summary is an optional key that can be used to provide a summary statement; in this case, it's how many resorts Bixby found in a search.
- The underflow-statement is what Bixby says if the user asks for the previous item in the list when they're on the first item.
- The overflow-statement is what Bixby says if the user asks for the next item in the list when they're on the last one.
- The overflow-question is a prompt read after the overflow-statement. (There is no corresponding question for underflow.)
- The item-selection-question is a yes-or-no question asking whether this is the item the user wants to select.
- page-marker is an optional key (not shown here) that defines spoken summaries for sets of paged items. See its documentation for an example of its usage.
- with-navigation-conversation-drivers is an optional key (not shown here) that, when included, will add localized, system-provided conversation drivers for "Next" and "Previous" paging commands. If you are using runtime version 5 or later, or if you specify the auto-insert-navigation-conversation-drivers runtime flag, then this key is automatically enabled.
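To show when the underflow and overflow keys come into play, the following Python sketch walks a list with next, previous, and repeat commands. It is a simplified model of the behavior described in the list above, not Bixby's implementation; the function name step and the returned labels are hypothetical, and what Bixby says after an underflow-statement is not modeled.

```python
from typing import Tuple


def step(index: int, count: int, command: str) -> Tuple[int, str]:
    """Apply one navigation command to a list of `count` items.

    Returns the new zero-based index and a label for what is spoken first.
    """
    if command == "next":
        if index == count - 1:
            # Already on the last item: nothing further to read.
            return index, "overflow-statement, then overflow-question"
        return index + 1, "item"
    if command == "previous":
        if index == 0:
            # Already on the first item: there is no earlier item.
            return index, "underflow-statement"
        return index - 1, "item"
    if command == "repeat":
        return index, "item"
    raise ValueError(f"unsupported command: {command}")


print(step(0, 3, "previous"))  # (0, 'underflow-statement')
print(step(2, 3, "next"))      # (2, 'overflow-statement, then overflow-question')
```

In both edge cases the position does not change, which is why the statements should tell the user they have reached the start or end of the list.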

The modes share keys, but some keys are specific to certain modes. The read-many and read-many-and-next modes have extra keys relating to paging. If we wanted to use read-many for the space resort selection example, we could use something like this:

```
...
navigation-mode {
  read-many {
    page-size (3)
    list-summary ("I found #{size(result)} resorts.")
    page-content {
      underflow-statement (This is the first page.)
      page-selection-question (Do you want one of these resorts?)
      item-selection-question (Which resort would you like?)
      overflow-statement (Those are all the resorts that meet your search.)
      overflow-question (What would you like to do?)
    }
  }
}
...
```

- page-size controls how many items are shown per page.
- page-selection-question asks about the page as a whole, not an individual resort.
- item-selection-question is asked when the user responds affirmatively to the page-selection-question. It expects an ordinal response (for example, "the second one"), not yes or no.

For more information, read the discussion of each individual navigation mode in the reference section.

Other Functionality

Your capsule does not need to include special handling for certain list navigation functionality that Bixby supports, but as a developer, it's good for you to know about these.

- Voice content selection allows users to choose items in a list by giving a response that matches or partially matches an item in the list, for example, "Chicken Alfredo" in a list of recipes. This is enabled by default, but can be disabled with an override either globally or within an input-view or result-view.
- When Bixby is displaying a detail view or a detail page defined within a result view of an item in a list, next and previous responses will navigate to the next or previous item detail rather than returning to the list, even if the next or previous item would be on a different page of results. If there is no next or previous item (that is, the user says "previous" at the first item or "next" at the last), Bixby will inform the user.
