Simple Search Capsule

About This Capsule

This sample capsule shows how to include basic search in a capsule. The user can start with a simple utterance, such as "Find me some shoes," or do a more specific search, such as "Find me dancing shoes."

The capsule responds with a summary of results if the user request matches two or more results. If the request matches a single result or if a user selects one of the results, they see a detailed view, which uses a lazy source to get additional details about the shoe.

The capsule also includes dialog customizations that tailor Bixby's responses to the results.

The capsule also includes these training entries:

- "Find a shoe for me!": No annotations.

- "Find a dance shoe for me!": Annotation for shoe type.

- "Find a dance shoe less than $85": Annotations for currency symbol and currency value, with a role of viv.money.MaxPrice; also an annotation for shoe type.

Because you cannot submit a capsule with the example namespace, in order to test a sample capsule on a device, you must change the id in the capsule.bxb file from example to your organization's namespace before making a private submission.

For example, if your namespace is acme, change example.shoe to acme.shoe.

Files Included in the Sample Capsule

The following folders and files are included in the sample capsule. The walkthrough explains in more detail what these files do and how they interact with each other.

- README.md - File that includes the latest updates to the sample capsule files.
- assets/images - Folder of images used in the results to display to the user.
- capsule.bxb - File that contains the metadata Bixby needs to interpret capsule contents.
- code - Folder of JavaScript files that actually execute the searches modeled in the actions.
  - lib - Folder of JavaScript files, which contain the shoes and accessories data in JSON format.
- models - Folder holding the different model types: actions and concepts.
- resources - Folder of resources, which are organized by locale. In this case, only US-English is supported.
  - base - Base resources used in all targets.
    - layouts - Folder containing the layout and view files.
    - capsule.properties - File containing configuration information for your capsule.
    - endpoints.bxb - File that specifies which JavaScript function maps to each action model.
  - en-US - Folder containing files for the US-English locale.
    - dialog - Folder of Dialog files that determine what Bixby tells the user.
    - training - Folder of training files that enable you to teach Bixby how to understand your users. You do not edit these files directly but instead interact with training via Bixby Developer Studio.
    - vocab - Folder of Vocabulary files that help Bixby recognize different shoe types. This capsule only has one vocab file (Type.vocab.bxb).
- stories - Folder containing stories, which test if Bixby is acting as expected. You normally interact with these files in Bixby Developer Studio and do not edit them directly.

Sample Capsule Goals

The example.shoe sample capsule and this guide highlight and explain the implementation of the following platform features:

- Exercise a simple Summary to Details workflow

- Use lazy properties in a Details layout

- Train utterances with a simple goal and annotated values

- Implement simple dialog customizations

- Showcase simple layout customization using core platform styles

Sample Capsule Walkthrough

This capsule specifically highlights a user doing a basic search for an item listed in a provided JavaScript file that contains JSON content. The user initiates the conversation by asking Bixby to find shoes ("Find me shoes!"). The user can be more specific by adding type or price parameters ("Find me hiking boots!" or "Find me shoes less than $50!"). If the user is not specific enough, Bixby provides a list of results that the user can then browse. If they select a shoe, further details are shown.

Initialization of Search

The primary purpose of this capsule is to let users search for shoes, optionally narrowing the search by shoe type or price. No matter how broad the user's query is, the capsule goal remains the same: a shoe, specifically the structure concept example.shoe.Shoe, which is defined in the Shoe.model.bxb file and is referred to as Shoe for the rest of this walkthrough. As the training recommendations state, goals should typically be a Concept for non-transactional use cases.

If you take a closer look at Shoe, you see that it is modeled as a structure concept, with several properties that can narrow a user's search:

- name - The user can specify the name of the shoe, for example "Find me bowling shoes".
- type - The user can specify the type of shoe, for example "Find me athletic shoes". Notice that this concept is an Enum type, so only the types listed in its model file are acceptable values in the query.
- price - The user can specify how much they want to spend on shoes, for example "Find me shoes that are less than $100".

Three other properties are also defined. Users aren't expected to query this information directly, but it is useful to show them.

- description - Descriptive text for the user.
- accessories - Accessories that the shoe might need.
- photo - A URL of a photo of the shoe to display to the user.
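To make the property list concrete, here is a hypothetical record of the kind lib/shoes.js might contain, plus a check that mirrors the Enum restriction on type. The sample values, the three-item type list, and the price layout (loosely modeled on viv.money.Price) are assumptions. The images array follows the shoe.images[0].url expression used by the image-card macro later in this walkthrough, and accessories are left out because they are populated on demand.

```javascript
// Hypothetical subset of the Type enum; the real list lives in the capsule's model.
const SHOE_TYPES = ["Athletic", "Boot", "Dance"];

// Hypothetical record shaped like the Shoe concept. Values are illustrative only.
const exampleShoe = {
  name: "Ballroom Classic",
  type: "Dance",
  // Assumed layout, loosely based on viv.money.Price.
  price: { value: 79.99, currencyType: { prefixSymbol: "$", currencyCode: "USD" } },
  description: "A lightweight shoe with a suede sole for smooth turns.",
  images: [{ url: "https://example.com/images/ballroom-classic.jpg" }],
  // accessories omitted: populated lazily when a details view needs them.
};

// Mirrors the Enum rule: only listed symbols are acceptable values for `type`.
function isValidShoeType(type) {
  return SHOE_TYPES.includes(type);
}

// Basic shape check a hypothetical endpoint could run before returning a record.
function isValidShoe(shoe) {
  return (
    typeof shoe.name === "string" &&
    isValidShoeType(shoe.type) &&
    typeof shoe.price?.value === "number"
  );
}
```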

The description, name, type, and accessories properties are mapped to primitive-type models that are also in the capsule, as explained in Modeling Concepts. Prices are handled differently: because the viv.money capsule already exists, capsule.bxb imports viv.money, and the capsule uses the viv.money.Price structure instead of defining its own price model. Importing capsules saves you from modeling the same concepts redundantly.

```
capsule-imports {
  import (viv.core) { as (core) }
  import (viv.money) {
    as (money) version (2.22.43)
  }
}
```

Important: This capsule only supports US-English users on mobile devices, as specified in the targets key of the capsule.bxb file. For more information on targets, see the target reference information.

```
targets {
  target (bixby-mobile-en-US)
}
```

See the capsule reference page for more information.

Find Search Results

Once the planner recognizes the goal, Bixby needs to find the shoes that match the query, pulling in a number of actions and concepts. When the planner generates this program, it uses the FindShoe action model to satisfy the goal. Since this capsule's sole purpose is to find shoes, FindShoe.model.bxb has type Search. It takes several optional inputs (name, type, minPrice, and maxPrice) and returns one or many Shoe structures. Note that the output key does not and cannot specify how many results should be returned.

FindShoe maps directly to the FindShoe.js file via local endpoints, as specified in the endpoints.bxb file by pointing the FindShoe action-endpoint to the local-endpoint FindShoe.js:

```
action-endpoint (FindShoe) {
  accepted-inputs (name, type, minPrice, maxPrice)
  local-endpoint ("FindShoe.js")
}
```

The FindShoe.js file simply filters the shoes.js JSON file and returns shoe objects that match the inputs specified by the user query. The shoe objects in shoes.js have properties that correspond directly with the properties in the Shoe concept model. While this capsule uses "mock data" by providing the JSON files with a limited number of items, you can use the same setup for search if you need to access non-local data.
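The filtering that FindShoe.js performs can be sketched as a plain function so it runs outside Bixby. This is a minimal sketch under assumptions, not the capsule's actual file: the sample data, the `{ value }` price layout, the shape of minPrice and maxPrice, and the case-insensitive substring match on name are all hypothetical. The key idea it shows is that every input is optional, and an omitted input simply does not constrain the results.

```javascript
// Hypothetical mock data standing in for lib/shoes.js.
const shoes = [
  { name: "Ballroom Classic", type: "Dance", price: { value: 79.99 } },
  { name: "Tap Star", type: "Dance", price: { value: 95.0 } },
  { name: "Trail Runner", type: "Athletic", price: { value: 120.0 } },
  { name: "Rugged Boot", type: "Boot", price: { value: 150.0 } },
];

// Keep only the shoes that satisfy every input that was actually provided.
function findShoe(allShoes, { name, type, minPrice, maxPrice } = {}) {
  return allShoes.filter((shoe) => {
    // Assumed matching rule: case-insensitive substring match on the name.
    if (name && !shoe.name.toLowerCase().includes(name.toLowerCase())) return false;
    if (type && shoe.type !== type) return false;
    if (minPrice && shoe.price.value < minPrice.value) return false;
    if (maxPrice && shoe.price.value > maxPrice.value) return false;
    return true;
  });
}
```

For example, `findShoe(shoes, { type: "Dance", maxPrice: { value: 85 } })` keeps only "Ballroom Classic", the mock-data counterpart of the "Find a dance shoe less than $85" query.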

Notice that there is an additional action model and corresponding JavaScript file in this capsule, FindAccessories. Because each shoe structure can have multiple accessories, populating that information for every shoe returned can be time consuming and resource intensive. Instead, Bixby can populate it on demand by calling FindAccessories, for example when a user is looking at a single shoe's details. This is called a lazy property, and you define it in the Shoe structure. It comes into play later, when the user reaches a detailed view of the results.
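As an analogy for how a lazy property behaves, the following plain-JavaScript sketch resolves accessories only when something reads them and then caches the result. In the capsule itself, this is declared in the Shoe model and backed by FindAccessories; the `accessoryIds` field, the catalog, and the `lookups` counter here are hypothetical and exist only to make the "on demand" behavior observable.

```javascript
// Hypothetical accessory data standing in for accessories.js.
const accessoryCatalog = {
  "dance-bag": { name: "Dance Bag", description: "Holds a pair of dance shoes." },
  "heel-guard": { name: "Heel Guard", description: "Protects heels on rough floors." },
};

let lookups = 0; // counts how often the (pretend) expensive lookup runs

// Stand-in for FindAccessories: resolve accessory IDs to accessory records.
function findAccessories(ids) {
  lookups += 1;
  return ids.map((id) => accessoryCatalog[id]).filter(Boolean);
}

// Wrap a shoe so `accessories` is computed on first access, then cached.
function withLazyAccessories(shoe) {
  let cached; // undefined until the first read
  return Object.defineProperty({ ...shoe }, "accessories", {
    enumerable: true,
    get() {
      if (cached === undefined) cached = findAccessories(shoe.accessoryIds ?? []);
      return cached;
    },
  });
}
```

A summary list can wrap every result without triggering any lookups; only the shoe whose details are opened pays the cost, and only once.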

Returning Results

After Bixby finds the shoe(s), it needs to return the results to the user. This is when you start to consider dialog and views.

Dialog

Dialog enables you to customize how Bixby tells the user what was found. The example.shoe capsule provides three dialog files:

- Shoe_Concept - Handles whether there is one shoe result or multiple shoes and changes the text accordingly, using concept fragments.
- Shoe_Result - Tells the user how many shoe results were returned, as well as which parameters were used in the query. Notice that it uses various Expression Language functions, such as spell() and lower(), to format the result to match what the user queried.
- Shoe_Value - Displays the name of the given shoe. This also uses Expression Language, specifically the value() formatting function.
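The kind of formatting these dialog files do can be approximated in plain JavaScript. This is a rough analogue, not Bixby's dialog engine: `resultDialog` picks singular or plural (the job of the Shoe_Concept fragments), spells out the count in the spirit of spell(), and lowercases the type in the spirit of lower(). The exact wording and the `spellNumber` helper, which only covers zero through twenty, are assumptions.

```javascript
const SMALL_NUMBERS = [
  "zero", "one", "two", "three", "four", "five", "six", "seven", "eight",
  "nine", "ten", "eleven", "twelve", "thirteen", "fourteen", "fifteen",
  "sixteen", "seventeen", "eighteen", "nineteen", "twenty",
];

// Spell out small counts; fall back to digits outside the table.
function spellNumber(n) {
  return SMALL_NUMBERS[n] ?? String(n);
}

// Build a summary sentence from the results and the (optional) queried type.
function resultDialog(shoes, type) {
  const count = spellNumber(shoes.length);
  const noun = shoes.length === 1 ? "shoe" : "shoes"; // concept-fragment choice
  const typePart = type ? `${type.toLowerCase()} ` : ""; // like lower(type)
  return `I found ${count} ${typePart}${noun}:`;
}
```

For twenty results with no type, this produces "I found twenty shoes:", matching the shape of the dialog shown in the debugger example below.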

There is no dialog file for no results. In this capsule, if Bixby can't find shoes that meet the criteria, it returns all the results. For example, if you ask for clown shoes, Bixby won't find any matches, so it loosens its constraints and finds all shoes, because the capsule is trained on "Find a shoe for me!", a more generic search request. However, you could create a layout and dialog in the conditional flow of Shoe_Result.view.bxb to handle the case where no results are returned, specifically by catching the case when size(shoe) == 0.
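The fallback described above can be illustrated with a small plain-JavaScript sketch. It only models the observable outcome under an assumption: in the real capsule, Bixby's planner relaxes the constraints on its own based on training, and `searchWithFallback`, `byType`, and the demo data are hypothetical names. The `relaxed` flag marks the point where a capsule could branch to a dedicated no-results dialog or layout instead.

```javascript
// Run a constrained search; if nothing matches, fall back to all items.
function searchWithFallback(allShoes, constraints, filterFn) {
  const matches = filterFn(allShoes, constraints);
  if (matches.length > 0) {
    return { shoes: matches, relaxed: false };
  }
  // size(shoe) == 0: drop the constraints, like the generic "Find a shoe for me!".
  return { shoes: allShoes, relaxed: true };
}

// Simple type-only filter used for the demo; a missing type matches everything.
const byType = (list, { type }) => list.filter((s) => !type || s.type === type);

// Hypothetical demo data.
const demoCatalog = [
  { name: "Tap Star", type: "Dance" },
  { name: "Rugged Boot", type: "Boot" },
];
```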

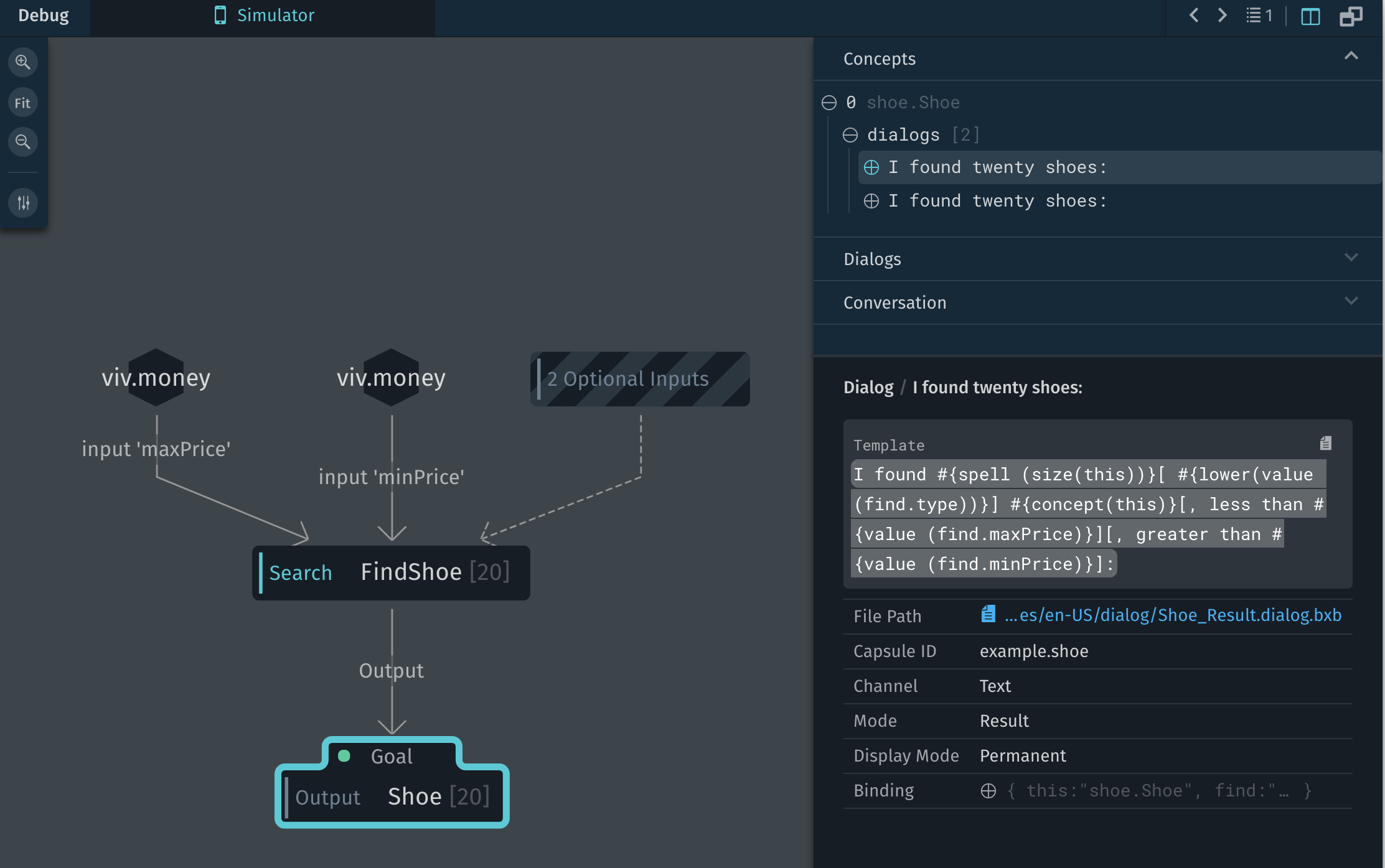

You can use the debugger to see the execution graph that Bixby made, and to see exactly which dialog is used at each point.

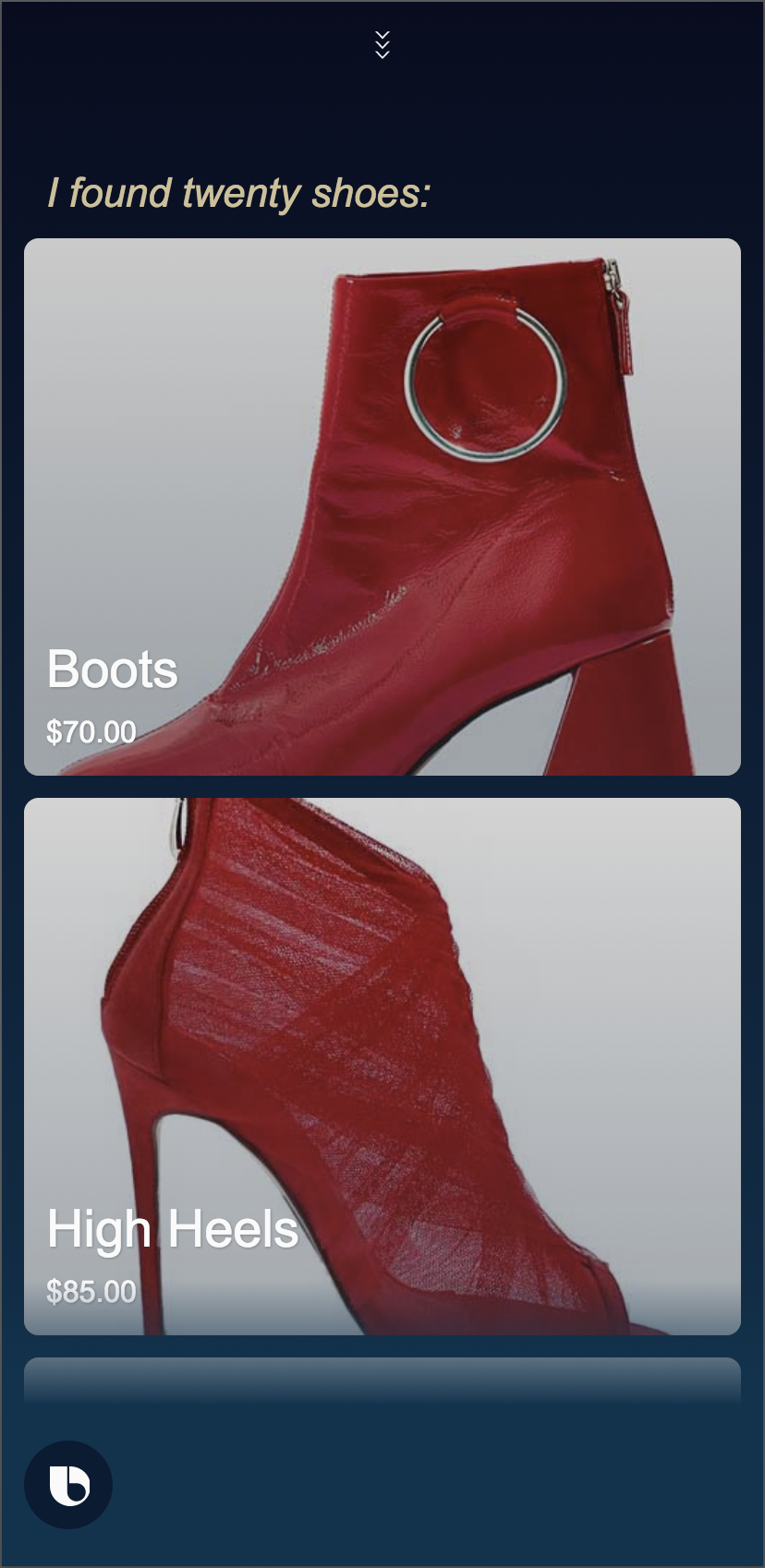

For instance, the dialog "I found twenty shoes:" was created using the template from the Shoe_Result.dialog.bxb file, under the US-English locale directory. You can further expand the dialogs node under the Concepts inspector window and select any of the words in that sentence structure to see which part of the template it corresponds to in the bottom Dialog X-Ray pane.

You can similarly use the debugger for inspecting other parts of your code, such as your models, functions, and conversation with Bixby.

Views

In addition to dialog, you can use a result-view to display customized results, and within a view file you can also use layout macros. In example.shoe, Bixby uses the Shoe_Result.view.bxb file whenever the result is a Shoe, because of the match pattern defined in that result view file:

```
match {
  Shoe (shoe)
}
```

Using conditional flows, Bixby can determine what to display to the user depending on the number of results returned:

- If the user's request is not specific enough, or multiple results fit the query, Bixby lists all the possible choices using the shoe-image-card layout macro:

```
if ("size(shoe) > 1") {
  list-of (shoe) {
    where-each (item) {
      macro (shoe-image-card) {
        param (shoe) {
          expression (item)
        }
      }
    }
  }
}
```

- For queries with only one result (size(shoe) == 1), Bixby uses a number of layout macros:

```
layout {
  macro (shoe-image-carousel) {
    param (shoe) {
      expression (shoe)
    }
  }
  macro (shoe-details-header) {
    param (shoe) {
      expression (shoe)
    }
  }
  macro (shoe-accessories) {
    param (shoe) {
      expression (shoe)
    }
  }
}
```
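The conditional flow above can be mirrored in plain JavaScript to make the branching explicit. This is a hypothetical sketch: `selectShoeView` and its return objects are not part of Bixby and only name the macros the view uses. The zero-result branch is the optional case discussed under Dialog, which the sample capsule does not implement.

```javascript
// Choose which layout to render based on how many shoes were returned.
function selectShoeView(shoes) {
  if (shoes.length > 1) {
    // Summary: one image card per shoe.
    return {
      kind: "summary",
      items: shoes.map((shoe) => ({ macro: "shoe-image-card", shoe })),
    };
  }
  if (shoes.length === 1) {
    // Details: three macros, all rendering the same shoe.
    const [shoe] = shoes;
    return {
      kind: "details",
      macros: ["shoe-image-carousel", "shoe-details-header", "shoe-accessories"].map(
        (macro) => ({ macro, shoe })
      ),
    };
  }
  // size(shoe) == 0: not handled by the sample capsule.
  return { kind: "no-results" };
}
```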

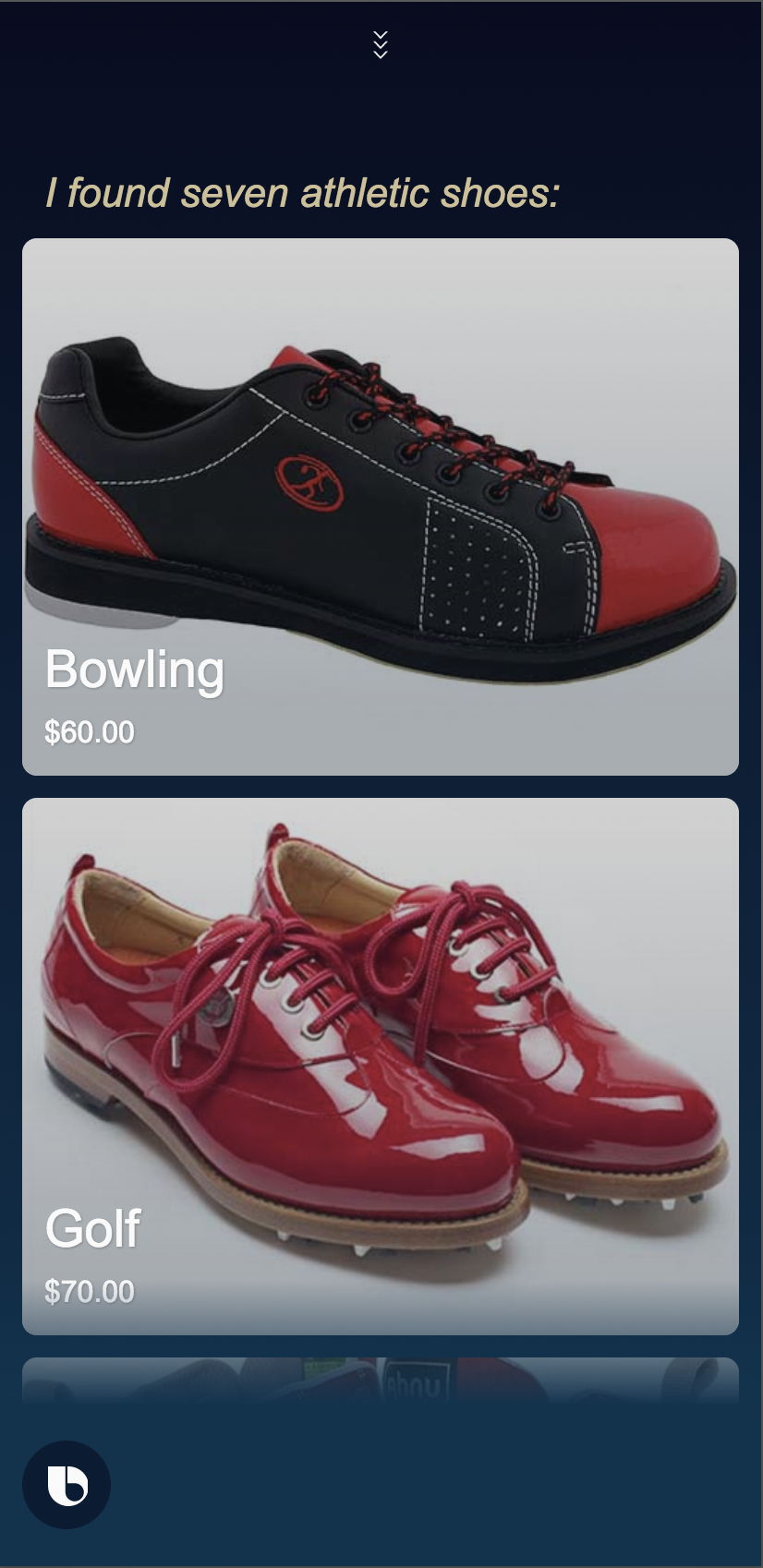

The shoe-image-card layout macro shows a summary of shoe results. For each Shoe that needs to be displayed, it creates an image-card component that shows the shoe image, name, and price:

```
macro-def (shoe-image-card) {
  params {
    param (shoe) {
      type (Shoe)
      min (Required) max (One)
    }
  }
  content {
    image-card {
      aspect-ratio (4:3)
      image-url ("[#{value(shoe.images[0].url)}]")
      title-area {
        halign (Start)
        slot1 {
          text {
            value ("#{value(shoe.name)}")
            style (Title_L)
          }
        }
        slot2 {
          single-line {
            text {
              value ("#{value(shoe.price)}")
              style (Detail_M)
            }
          }
        }
      }
      on-click {
        view-for (shoe)
      }
    }
  }
}
```

This top-level information is all that needs to be displayed when summarizing results, which is why an image-card is recommended. For more information on design guidelines, see Design Guides.
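To show which fields the card actually reads, here is a plain-JavaScript sketch that maps a shoe to the values the shoe-image-card macro displays. It is an illustration under assumptions: `toImageCard` is hypothetical, `Intl.NumberFormat` with US dollars stands in for Bixby's value() formatting, and returning null for a missing image only loosely mirrors the optional `[...]` template around the image URL.

```javascript
// Stand-in for value(shoe.price) formatting, assuming US dollars.
const priceFormatter = new Intl.NumberFormat("en-US", { style: "currency", currency: "USD" });

// Collect the data one image card needs from a single shoe.
function toImageCard(shoe) {
  return {
    aspectRatio: "4:3",
    imageUrl: shoe.images?.[0]?.url ?? null, // first image, or null if absent
    title: shoe.name, // slot1, styled Title_L in the macro
    detail: priceFormatter.format(shoe.price.value), // slot2, styled Detail_M
    onClick: { viewFor: shoe }, // selecting the card opens the details view
  };
}
```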

Detailed Results

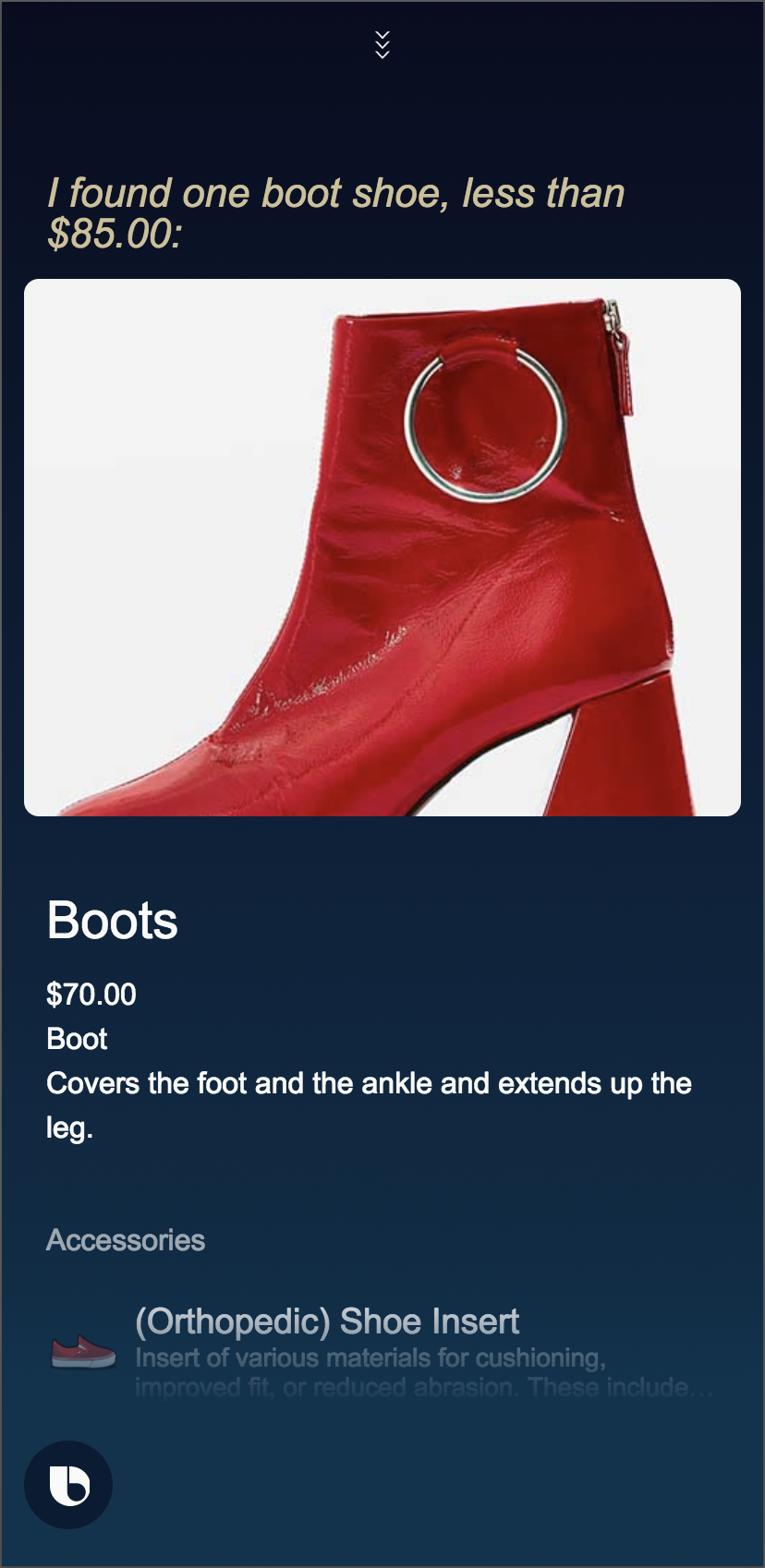

If there is a single result (size(shoe)==1) or if the user wants to take a closer look at one of the items, the layout provides information through three layout macros:

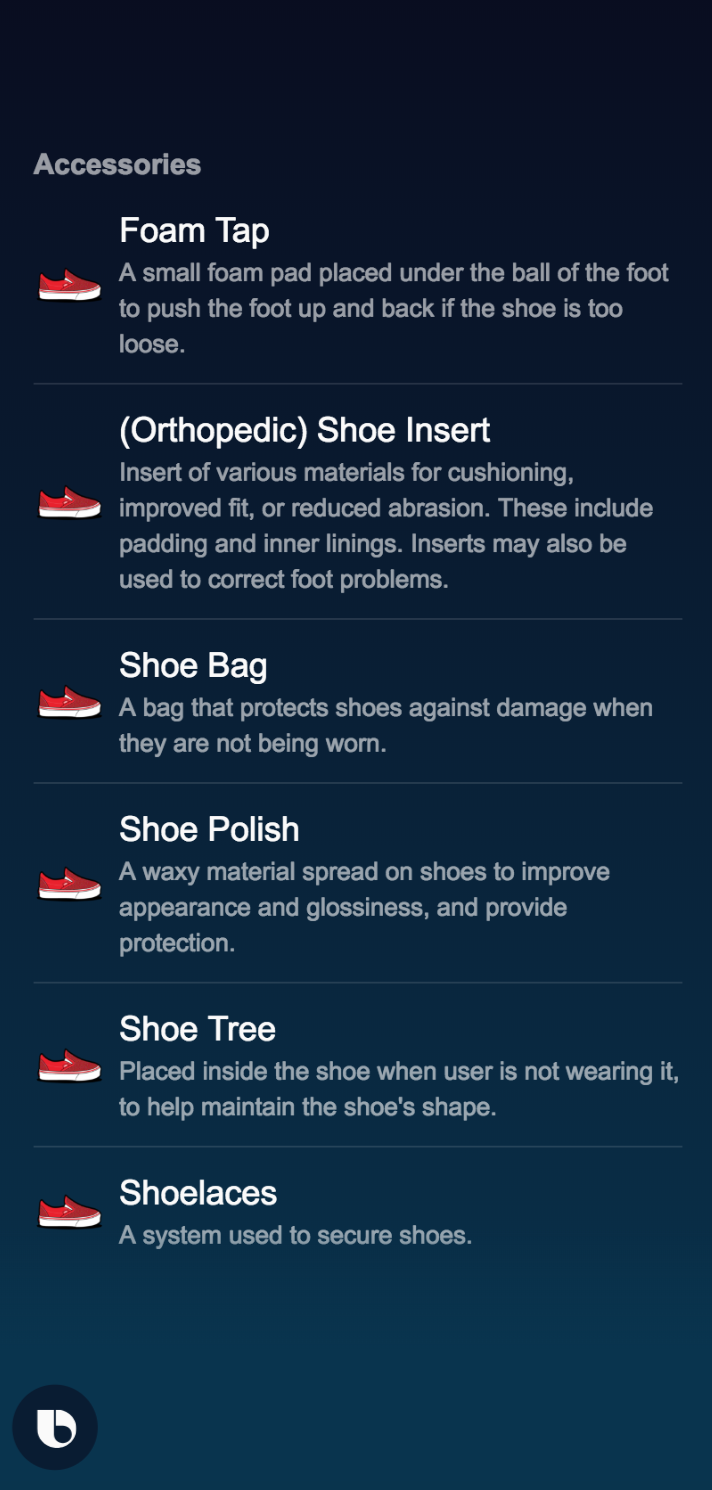

- shoe-image-carousel: Shoe image
- shoe-details-header: Shoe name, price, type, and description
- shoe-accessories: Accessory image, name, and short description

The complete Shoe_Result.view.bxb file combines the summary and details branches:

```
result-view {
  match {
    Shoe (shoe)
  }
  render {
    if ("size(shoe) > 1") {
      list-of (shoe) {
        where-each (item) {
          macro (shoe-image-card) {
            param (shoe) {
              expression (item)
            }
          }
        }
      }
    } else-if ("size(shoe) == 1") {
      layout {
        macro (shoe-image-carousel) {
          param (shoe) {
            expression (shoe)
          }
        }
        macro (shoe-details-header) {
          param (shoe) {
            expression (shoe)
          }
        }
        macro (shoe-accessories) {
          param (shoe) {
            expression (shoe)
          }
        }
      }
    }
  }
}
```

Specifically, the details layout calls the shoe-accessories layout macro, which determines what information to show about the shoe's accessories. Each accessory is displayed in a cell-area component. Layout macros let you reuse UI pieces across views and refine each one in a single place.

Note that this is where lazy properties come in, as briefly mentioned in the Find Search Results section. Bixby only needs to populate accessory information for the shoe being viewed, not for every result. Therefore, it is more efficient to call FindAccessories.js, which gets that information from shoes.js and accessories.js, only when the detailed view needs it.

Ending the Experience

After Bixby returns results, users can browse through them or get additional details about a particular item. From there, the user can either make a new query or simply exit the experience. There are no follow-ups that the capsule needs to handle.

Training Your Capsule

So far, you've walked through a typical journey the user would take using this capsule. You've also learned how you should engineer the responses. This section discusses how to train the capsule, which in turn teaches Bixby typical queries regarding searching for shoes.

There are five training entries in this capsule; this section discusses these three:

- "Find a shoe for me!": No annotations

- "Find a dance shoe for me!": Annotation for shoe type

- "Find a dance shoe less than $85": Annotations for currency symbol and currency value

All three entries have the same goal: example.shoe.Shoe. You should test each entry by running the Simulator to ensure that the results match expectations. You can also check the execution graph to see that it matches the general user workflow previously described. (Note: these entries might appear in a different order in Bixby Developer Studio.)

The "Find a shoe for me!" entry is the most generic, so only the goal is listed. You should see that Bixby returns all the results from shoes.js (click the Show More button to see all the results). Click on one of the items to see the detailed view.

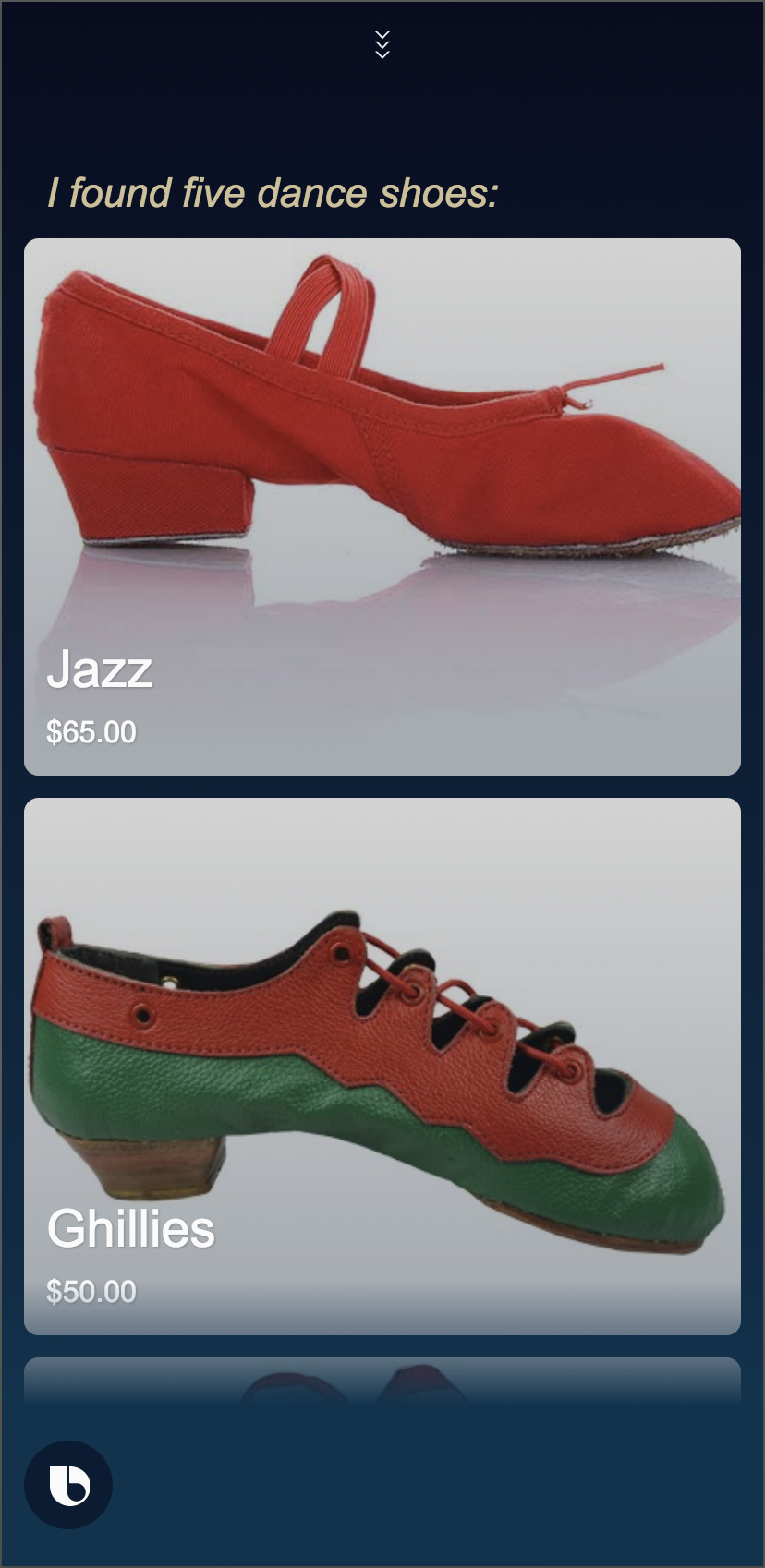

The "Find a dance shoe for me!" entry is a little more specific, in that the shoe type (dance) is annotated. The corresponding Type.vocab.bxb file teaches Bixby what words are equivalent to the listed Types. When run in the test console, a summary layout displays five dance shoes. Again, you can click on one of the items to view the details layout of that shoe.

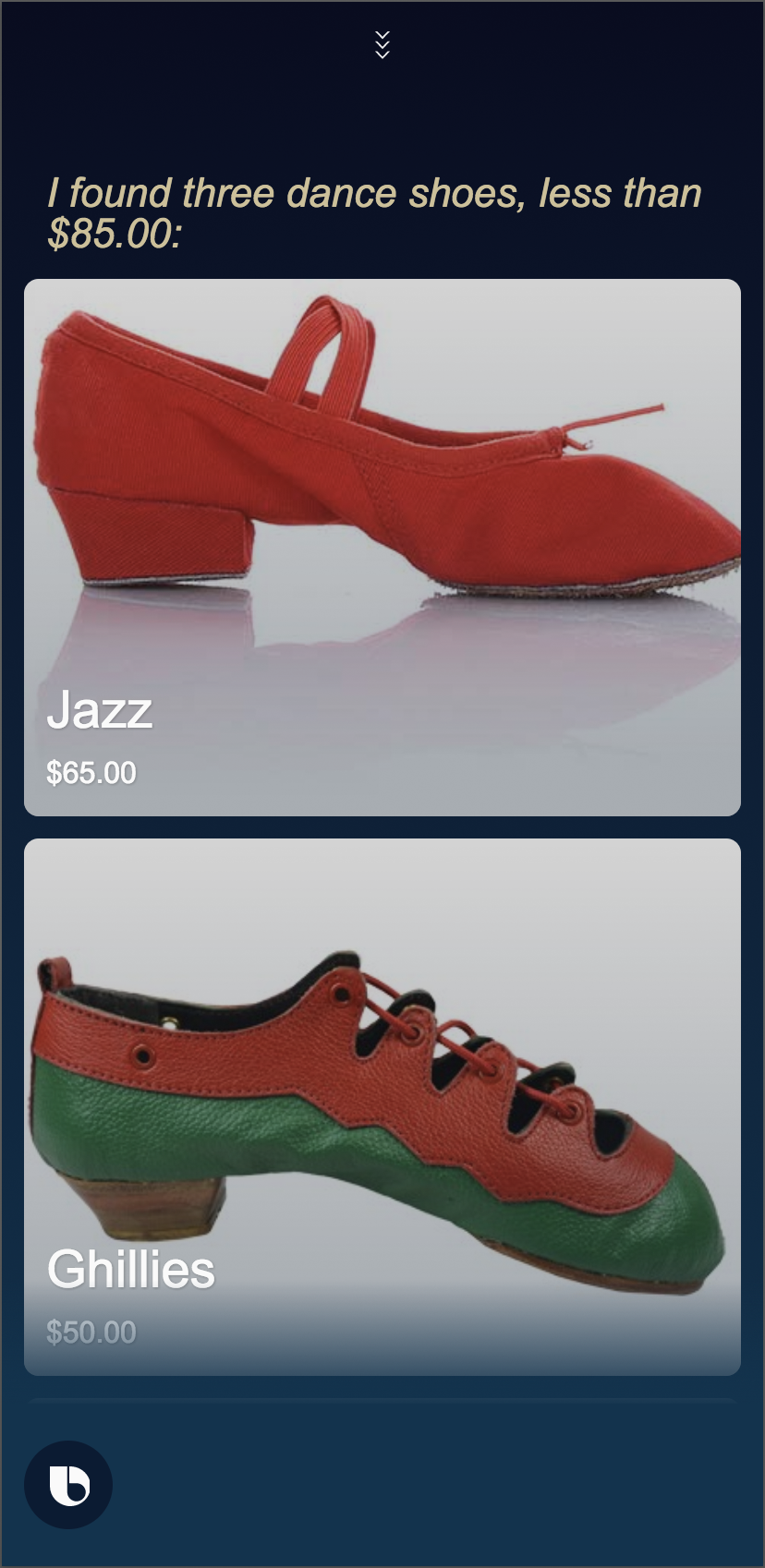

The "Find a dance shoe less than $85" entry is the most specific. The type is dance, while maxPrice is set to $85. Only three shoes are returned this time.

These three training entries, along with the other training entries in this capsule, start to teach Bixby how to understand queries. However, you should add many more training entries so that Bixby can handle a wider variety of queries. For more information on best practices for training, see Training Best Practices.

If you run a request in the Simulator like "Find me dance shoes" and then follow up with a different request like "Find shoes!", the platform treats the second request as a continuation unless you run it as an outer intent. To run a request as an outer intent, use Cmd + Shift + Enter.

Stories and Assertions

There are a few stories included in this capsule. Stories enable you to test your capsule through user stories. The included stories are all examples of the use cases discussed previously. You can also use stories to check that the user workflow matches the engineering design discussed in this guide.

- AthleticShoes - This story has a user utterance of "Find athletic shoes", which finds seven shoes that fit the athletic shoe type criteria.
- Boot - This story has a user utterance of "Find a boot", which finds a single matching shoe. In return, Bixby says "I found one Boot shoe" and displays the details view of the Boot.
- DanceShoe - This story has a user utterance of "Find some dance shoes". Bixby reports "I found five Dance shoes" and displays a summary of the five dance shoes returned.
- Shoe - This story has two steps. In the first step, the user asks Bixby "Find some good shoes". Bixby reports "I found nineteen shoes" and displays all nineteen shoes. The second step simulates the user selecting the Boot shoe, and Bixby in turn displays the details of the Boot.
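The idea behind a multi-step story such as Shoe (run a step, then assert on what Bixby shows) can be sketched as a tiny test runner. This is only an analogy: real stories are recorded and run in Bixby Developer Studio, not written like this, and the data, `search`, `viewFor`, and `runStory` are hypothetical.

```javascript
// Hypothetical data for the story.
const storyCatalog = [
  { name: "Tap Star", type: "Dance" },
  { name: "Ballroom Classic", type: "Dance" },
  { name: "Boot", type: "Boot" },
];

// Toy search: a missing type returns everything.
function search(type) {
  return storyCatalog.filter((shoe) => !type || shoe.type === type);
}

// Same rule as the result view: one result shows details, more show a summary.
function viewFor(shoes) {
  return shoes.length === 1 ? "details" : "summary";
}

// Run steps in order, feeding each step the previous step's state.
function runStory(steps) {
  let state = null;
  return steps.map((step) => {
    state = step.run(state);
    return { label: step.label, passed: step.check(state) };
  });
}

const shoeStory = [
  {
    label: "search all shoes",
    run: () => search(),
    check: (shoes) => viewFor(shoes) === "summary" && shoes.length === 3,
  },
  {
    label: "select the Boot",
    run: (shoes) => shoes.filter((s) => s.name === "Boot"),
    check: (shoes) => viewFor(shoes) === "details",
  },
];
```

Rerunning `runStory(shoeStory)` after every change plays the same role as rerunning stories: it confirms the workflow still produces the expected views.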

Training and Stories go hand in hand. While training Bixby, you should be checking that the workflow is giving expected results. Stories in turn let you repeatedly check that your code and training are following expected patterns.