Dialog Design Patterns

Principles

Bixby is designed to be a reliable, trustworthy, and friendly assistant. Whether users are talking, tapping, or pressing to interact with Bixby, it will feel like a unified experience. Bixby's dialog should follow these principles:

Speak naturally

Using everyday language and an appropriate tone of voice via SSML, Bixby sounds like your companion. By encouraging conversation, Bixby lets people interact by communicating naturally.

Be helpful

Bixby helps decrease the time it takes to get a person to their goal. Through quick answers, guided questions, and predictive abilities, Bixby puts the user's needs first.

Adapt to the context

Bixby communicates seamlessly between devices, whether or not a device has a screen and regardless of where a person is using it. This ability to work across devices means that people can move in and out of hands-free mode during a single conversation.

Keep it simple

Bixby gives users the information they want in the fewest words possible. By reducing cognitive load and offering simple ways to navigate, Bixby empowers people to interact with it.

These principles guide designers and developers in building voice-first Bixby experiences. While there are many ways a conversation can occur across various devices, Bixby streamlines these experiences by using dialog patterns. Since users might not see an interface while interacting with Bixby, these principles and patterns are meant to deliver an enhanced voice experience to users.

Just as design systems are used to maintain visual interfaces, this design guide aims to apply that same framework to voice interfaces. It demonstrates common user interactions with Bixby so that designers and developers can reference it to do the following:

- Understand the best ways Bixby can respond to a user.

- Create consistent Bixby interactions across devices.

- Apply dialog patterns toward similar use cases or new capsules.

- Confidently design and develop capsules without a dedicated UX team.

Patterns Overview

The voice-first design patterns cover Bixby's experiences along two axes:

- User Goals: Do they want to create something, take an action, or find information?

- Bixby's Needs: Does Bixby have the information it needs to continue the conversation or complete the request, or does it need to request more detail or clarify what it didn't understand?

Since conversations are a two-way interaction, many patterns link to others in order to continue the back-and-forth chain of what Bixby needs to achieve the user's goal.

User Goals

- Simple Creation: The user creates an object (such as an alarm or a timer) on a device. Bixby might ask for additional information.

- Simple Action: One of two actions occurs (yes/no, on/off), typically interacting with another device that provides real-world feedback (such as turning lights on or off).

- Instant Reaction: Similar to Simple Action, but for cases where no dialog is necessary because it would interrupt an experience or the completed action is obvious.

- Look-Up: Bixby shows a query result after a user searches for information, such as contact information or web searches.

- Suggestion: Bixby provides a suggestion using personalization and learning features to narrow down choices after a search.

- List Reading: Bixby provides a list without asking the user to take action.

- Summary and Fluid Reading: Bixby reads a summary of relevant information, then prompts to provide organized information at the user's pace.

Bixby's Needs

- Confirmation: Bixby prompts the user to make sure they are certain about a sensitive request (such as texting or making a purchase).

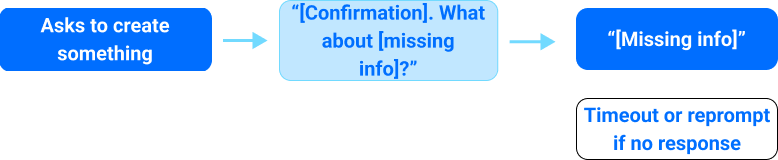

- Missing Information: The user answers Bixby's questions, but leaves out information required for Bixby to move to the next step.

- General Reprompt: If Bixby doesn't understand the user's intent or utterance, Bixby will rephrase the question to continue the conversation.

- Disambiguation: Bixby provides options that the user can choose from when there is a range of responses, such as when there are multiple results that require user selection.

- No Response: This offers options to the user after a General Reprompt when the user has not responded, or Bixby is unable to match their response to a valid intent.

Patterns for User Goals

Users talk to Bixby when they need help to do something. As an assistant, Bixby is there to complete the goals that a user sets out to do, which could mean making something new like setting an alarm, or finding the latest weather forecast.

For straightforward tasks that users need immediately, Simple Creation is best. When a user is taking an action that has only a couple of choices (on or off, call or don't call), the Simple Action framework works best. Instant Reaction works similarly in cases where dialog is unnecessary.

When users need to find information, Look-Up gets them to their results. If there are many results, though, Bixby can either recommend one as a Suggestion or use List Reading to inform the user of several options. There are many ways to read out a list, but when there are different categories of information, using Summary and Fluid Reading could be best.

Simple Creation

The user creates an object such as an alarm or a timer on a device.

Simple Creation Examples

User: "Start a timer for 5 minutes"

Bixby: I started a timer for 5 minutes.

User: "Remind me to work out tomorrow morning at 9"

Bixby: I'll remind you to work out at 9 AM tomorrow.

User: "Set up lunch with Mary at 2 next Thursday"

Bixby: I added Lunch with Mary at 2 PM next Thursday, March 17.

Simple Creation Guidelines

- User goal: Create something that will be completed or accessed in the future.

- Requirements:

- Voice: Since Bixby doesn't know if the user can see results, Bixby should repeat back the most important parts of what the user said. This way, Bixby is not burdening users with too much information while the user also knows that they were heard correctly.

- Screen: Display a view of the created object so the user can make sure the details are correct.

- Situational:

- Include an option to edit, either as a response when the user asks to "change" something or as a button on the screen. Rather than interrupt a user's flow by telling them "by the way, you can change...", ensure that Bixby can still accommodate this common request if asked.

- If the user wishes to delete data, follow up with a Confirmation pattern.

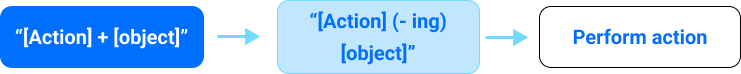

Simple Action

One of two actions occurs (yes/no, on/off), typically interacting with another device that provides real-world feedback, such as lights.

Simple Action Examples

User: "Turn on the lights"

Bixby: Turning on kitchen lights.

User: "Call Tim"

Bixby: Calling Tim Smith.

Simple Action Guidelines

- User goal: Change the current state of a device or experience with explicit feedback from Bixby.

- Requirements:

- Voice: When the action's result is not immediately obvious through other feedback, such as turning on the lights in a different room or changing the air conditioning settings, Bixby should confirm that the action requested is happening or about to happen by using a sound or explicit dialog ("Preheating oven to 350 degrees"). This gives users certainty about what is happening to their devices or environment.

- Screen: Demonstrate changed action state by punching out to a relevant capsule or showing how controls have changed.

- Situational:

- It is up to the developer to gauge how specific the dialog needs to be, given the user's end goal. For example, if it is known that the user is controlling a device from the same room, changing that device's state gives the same information as Bixby stating something changed. In this case, it might not be necessary to include Bixby dialog (see Instant Reaction).

- If necessary, Bixby will ask follow-up questions to clarify (see Missing Information or Disambiguation).

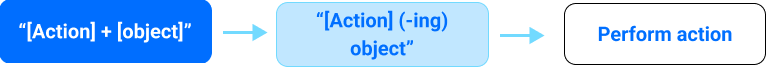

Instant Reaction

Similar to Simple Action, but in cases where no dialog is necessary because it would interrupt an experience, or the completed action clearly occurred.

Instant Reaction Examples

User: "Increase volume"

Bixby: (audible noise or visual indicator that the volume increased)

User: "Turn on the TV"

Bixby: (TV turns on)

User: "Turn on the light"

Bixby: (Light turns on)

User: "Open YouTube"

Bixby: (opens the YouTube app)

Instant Reaction Guidelines

- User goal: Change the current state of a device or experience without explicit feedback from Bixby.

- Requirements:

- Voice: Bixby's ultimate goal is to help the user quickly in a relevant way. When the new state of the device or experience is obvious without explicit confirmation, such as turning on the lights in the same room, Bixby should not use dialog that delays the completion of the action (such as saying "turning on the lights" rather than just turning them on). Keep dialog short (such as simply saying "okay"), or communicate the change of action with a simple sound, visuals, or haptics.

- Screen: Demonstrate the changed action state by punching out to the relevant capsule or showing how controls have changed.

- Situational: This pattern works well if you have to punch out to a capsule quickly without using Bixby dialog in-between.

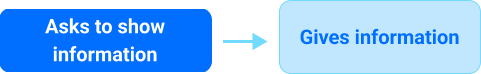

Look-Up

Bixby shows a query result after a user searches for information. More than one possible option or follow-up action can occur.

Look-Up Examples

User: "What's the weather like today?"

Bixby: It's partly cloudy and 67 degrees right now.

User: "When is Christmas?"

Bixby: Christmas Day is Tuesday, December 25.

Look-Up Guidelines

- User goal: Find relevant information about the topic asked.

- Requirements:

- Voice: To be a helpful assistant, Bixby needs to provide context to its answer. Provide context either by repeating back part of the question or tailoring it to user preferences. For example, if someone asked about the weather, saying "in [user's city]" adds extra clarity. Since users want quick, relevant answers, Bixby should also limit its answer to about 15 seconds (one or two sentences). Always give the option to "hear more" or redirect to another page if there is additional information that Bixby didn't read out or that would be better seen on a screen.

- Screen: Show views or text of the result. Provide navigation options for users to read more or refine their search.

- Situational: Since answers could involve various types of data, ensure that Bixby is presenting it in a way that the user will understand. One way to know is by accepting certain formats (numbers, times) from the user and having Bixby provide answers in that same format.

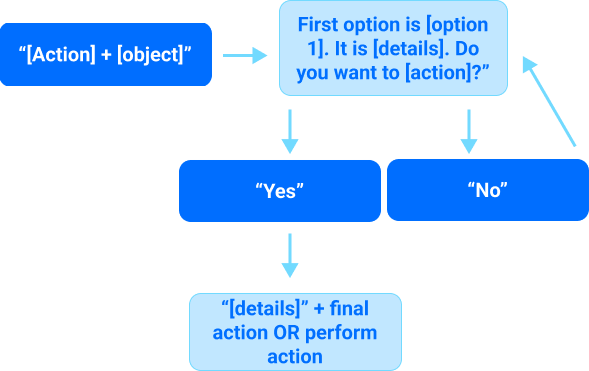

Suggestion

Bixby provides a suggestion using personalization and learning to narrow down choices after a search.

Suggestion Examples

User: "Find gas stations near me"

Bixby: The closest gas station is Shell San Jose Downtown. It's 0.5 miles away and has a 5-star rating. Do you want this one?

User: "Find chicken recipes"

Bixby: This oven-roasted chicken takes 30 minutes to prepare and serves 4. Do you want to choose this?

User: "Play music"

Bixby: The most popular music app is Spotify. Listen here?

User: "Sure"

Suggestion Guidelines

- User goal: Find the best option for a specific search.

- Requirements:

- Voice: Suggestion is powerful since it is a natural part of human conversation and showcases Bixby's abilities as an intelligent assistant. This pattern relies on Bixby's ability to query a subset of options. The way Bixby curates the suggestion is up to the developer and how Bixby interprets the user's request. After providing the first option, there should be additional details, ending with a call to action ("Do you want to choose this?"). If the user says "No" to the initial suggestion and many options are remaining, consider the List Reading pattern.

- Screen: Highlight or display the suggestion. If there is a list, then ensure the user can select between items by tapping or scrolling.

- Situational:

- Personalization and learning are powerful tools when they incorporate past user behavior or preferences into a query result.

- Ensure that the functionality exists to incorporate data types, since the user might need to give special access to location sharing, for example.

- Optimize the sort order of lists so that the most relevant results are read to the user first, such as sorting by closest gas station rather than the one with the highest ratings.

- Users might want to refine their search. Make sure that new suggestions are available if the user wants to be more specific in their follow-up search.

List Reading

Bixby provides a list without asking the user to take action.

List Reading Examples

(not reading) User: "Show shopping list"

Bixby: [shows view]

(reading) User: "Check reminders"

Bixby: Go to DMV tomorrow at 9 AM and file tax returns Friday at 9 AM.

List Reading Guidelines

User goal: Get relevant pieces of information about the topic asked.

Requirements:

Voice: People don't like hearing long lists, so this is where SSML can be used to increase the reading speed. Lists can be read in a variety of ways depending on how many items there are, similar to Disambiguation. The differences in list reading depend on the data types being read and how detailed a response the user needs.

Bixby should read up to 3 items within the same category before giving the option to hear more. This reduces the cognitive load on the user.

Bixby: At 9 AM, interview. At 11 AM, call Rachel. At 1 PM, pick up lunch. Want to hear more?

If there are many items in a list, it could help to give a high-level summary before introducing more options:

User: "Check notifications"

Bixby: 10 notifications from Slack, Twitter, CNN, and two others. Do you want to hear Slack notifications?

See more on Summary and Fluid Reading.

Screen: Provide full details, but not everything needs to be read out loud. Things will be highlighted as they are read for users to follow along.

Situational:

- For complicated list items or large amounts of text, Bixby can tell users to see the screen for more information (for example, reading data privacy guidelines).

- Disambiguation is distinct from list reading because while disambiguation might require a list of options, it is only used to help the users choose between similar options. List reading in this case, however, is used to inform the user of multiple pieces of data or results. The user might take action after hearing the results. However, this action would likely be treated as a Simple Action or Simple Creation.
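The three-item limit described in the Voice requirement can be sketched as a small paging helper. This is a minimal illustration in Python, not Bixby's dialog API: the function name `read_list_page`, its parameters, and the fourth sample event are all hypothetical.

```python
def read_list_page(items, start=0, page_size=3):
    """Return the spoken text for one page of a list and the next start index.

    Hypothetical helper: reads up to `page_size` items, then offers to
    continue only when unread items remain.
    """
    page = items[start:start + page_size]
    next_start = start + len(page)
    spoken = " ".join(f"{item}." for item in page)
    if next_start < len(items):
        spoken += " Want to hear more?"
    return spoken, next_start


# Sample data; the fourth entry is invented to show the "hear more" case.
schedule = [
    "At 9 AM, interview",
    "At 11 AM, call Rachel",
    "At 1 PM, pick up lunch",
    "At 3 PM, team sync",
]
first_page, position = read_list_page(schedule)
```

Calling `read_list_page(schedule, position)` after the user says "yes" would read the remaining item without another prompt, since nothing is left to offer.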

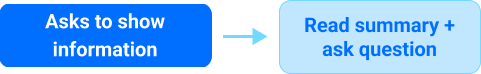

Summary and Fluid Reading

Bixby reads a summary of relevant information, then prompts to provide organized information at the user's pace.

Summary Examples

User: "What is my schedule today?"

Bixby: You have 16 events from 9 AM to 7 PM. At 9 AM, interview. At 11 AM, call Rachel. Want to hear more?

Summary Guidelines

- User goal: Hear an overview of a requested topic.

- Requirements:

- Voice: Using a high-level summary keeps information organized, simple, and efficient. Just as an assistant would give someone a quick rundown of their busy day, Bixby assumes that all users are limited on time and accommodates in the same way. If there are many items in a list, Bixby starts with a high-level summary before introducing more options. This follows a similar format to general list reading.

- Screen: Summaries in the form of aggregated data/views help make information scannable to the user. Including Bixby's summary sentence on the screen before or alongside all results also contextualizes the experience.

Patterns for Bixby's Needs

Bixby acts as an assistant during a conversation. This means that Bixby seeks to understand what the user is asking for and then acts on it in a way that is helpful, contextual, and natural.

If the user is asking to do something difficult to reverse, like sending a text or payment, a Confirmation is the way to go. Sometimes to complete something, Bixby will also need to ask for Missing Information or prompt the user to give more details.

Bixby can use Disambiguation to help users choose between similar options. When Bixby hears something that doesn't quite make sense, a General Reprompt can get the conversation back on track. Or in cases where users aren't sure how to answer, Bixby can use No Response dialog to continue the conversation.

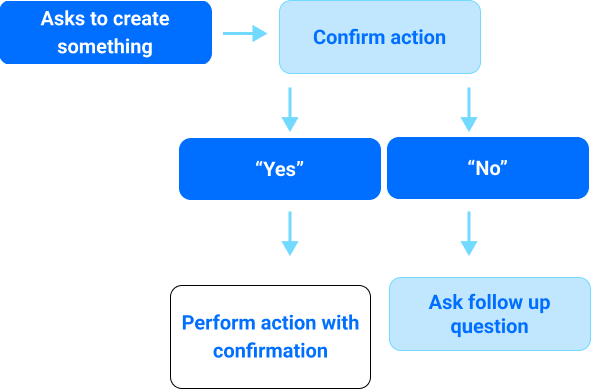

Confirmation

Bixby prompts the user to make sure they are certain about a sensitive request, such as sending a message or making a purchase.

Confirmation Examples

User: "Send 'hey what's up?' to Tim"

Bixby: "Hey what's up?". Ready to send?

User: "Order a small iced tea from Flower Cafe"

Bixby: That will be $3. Pay with your usual credit card?

User: "Sure"

Bixby: Great. Your order will be ready in 10 minutes.

Confirmation Guidelines

- User goal: Create something, typically involving another person or party.

- Requirements:

- Voice: Whenever someone is about to do something that is difficult to reverse, Bixby wants to be sure it is the correct action. Bixby can actively confirm by repeating back the most important aspects of what the user said and asking if it was heard correctly. Once the user agrees that this is correct, Bixby can complete the action.

- Screen: Include buttons for the user to take action, along with a display of what the user said or entered so they can confirm Bixby is correct.

- Situational: If the user disagrees with Bixby's interpretation of the action (they say "no" when Bixby asks for confirmation), Bixby should ask a follow-up question to have the user try again.
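The confirmation exchange above can be read as a small decision step: repeat back, wait for agreement, and act only on a clear "yes". The following Python sketch illustrates that flow under stated assumptions. The `confirm` function, the reply vocabularies, and the retry wording are hypothetical and are not part of Bixby's API.

```python
# Hypothetical reply vocabularies; a real capsule would rely on Bixby's
# own understanding of the user's answer.
AFFIRMATIVE = {"yes", "sure", "okay", "ok"}
NEGATIVE = {"no", "nope", "cancel"}


def confirm(summary, question, reply=None):
    """Return (next_step, spoken_text) for one turn of a confirmation."""
    if reply is None:
        # First turn: repeat back the key details, then ask.
        return "ask", f"{summary} {question}"
    normalized = reply.strip().lower()
    if normalized in AFFIRMATIVE:
        # Only now is it safe to carry out the hard-to-reverse action.
        return "execute", None
    if normalized in NEGATIVE:
        # Hypothetical follow-up wording that lets the user try again.
        return "retry", "Okay. What would you like to change?"
    # Anything else is treated as unrecognized and the question is repeated.
    return "reprompt", f"I didn't catch that. {question}"


step, spoken = confirm("That will be $3.", "Pay with your usual credit card?")
```

Keeping the action behind the `"execute"` step means an unrecognized reply can never trigger a purchase or a sent message by accident.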

Missing Information

The user answers Bixby's questions, but leaves out information required for Bixby to move to the next step.

Missing Information Examples

User: "Start timer"

Bixby: How long?

User: "Add an event for Thursday"

Bixby: What time?

Missing Information Guidelines

- User goal: Quickly create something with Bixby.

- Requirements:

- Voice: To be a helpful assistant, Bixby needs a certain amount of information from users. When there is not enough information to complete the task, Bixby asks a targeted question seeking the information that is missing. If Bixby only interprets answers based on a specific format (such as date or time), then give examples: "What time? You can say something like noon or 1 PM."

- Screen: Display a view of the utterance with blank spaces or error states around where the missing information should be filled.

- Situational: Sometimes, the user does not provide the missing information in a format that Bixby understands. If this happens, then Bixby will use reprompts to clarify.
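One way to picture the targeted question is as a lookup over required fields: Bixby asks about the first field the user has not supplied. The Python sketch below illustrates that idea only. The slot tables, the `next_question` helper, the "What day?" question, and the timer hint are hypothetical and do not reflect how Bixby models inputs.

```python
# Hypothetical slot definitions: each required slot maps to a targeted
# question and an optional hint showing an accepted format.
TIMER_SLOTS = {
    "duration": ("How long?", 'You can say something like "5 minutes".'),
}
EVENT_SLOTS = {
    "date": ("What day?", None),
    "time": ("What time?", "You can say something like noon or 1 PM."),
}


def next_question(required_slots, filled, include_hint=False):
    """Return the question for the first missing slot, or None if complete."""
    for name, (question, hint) in required_slots.items():
        if filled.get(name) is None:
            if include_hint and hint:
                return f"{question} {hint}"
            return question
    return None


# "Add an event for Thursday" supplies the date but not the time.
prompt = next_question(EVENT_SLOTS, {"date": "Thursday"})
```

Passing `include_hint=True` appends the format example, which suits the case where Bixby only accepts answers in a specific format.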

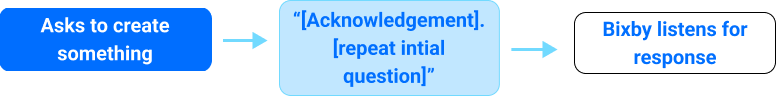

General Reprompt

If Bixby doesn't understand a user's intent or utterance, Bixby will rephrase the question to continue the conversation.

General Reprompt Examples

User: "Play music"

Bixby: Do you want to play Spotify?

User: [something Bixby doesn't successfully parse]

Bixby: I didn't catch that. Where do you want to listen?

General Reprompt Guidelines

- User goal: Communicate clearly to Bixby in order to create something.

- Requirements:

- Voice: To continue a conversation after misunderstandings or silence, Bixby must ask follow-up prompts to obtain an answer. After Bixby asks its first question in response to a command, a user might give no response or say something that Bixby doesn't recognize. Bixby should acknowledge the problem without blaming the user (saying something such as "I didn't catch that"). Then, Bixby asks another question that seeks an answer to the initial prompt.

- Voice: If further no-match or no-response turns occur, then Bixby can reprompt in a more tailored way based on a particular capsule or scenario. It is up to the developer to ask for reprompts. Read about the No Response pattern.

- Screen: Display choices for the user to get them to the next step. Provide instruction text that is similar or identical to the reprompt question Bixby is asking verbally.

- Situational:

- This pattern is slightly different from progressive help (see Disambiguation). In progressive help, Bixby gives specific examples to guide a choice. While the goal is the same as a general reprompt (getting the user to answer appropriately), the application is more specific than generalized as it is here.

- For how Bixby responds in case of multiple reprompts or misunderstandings, see No Response.

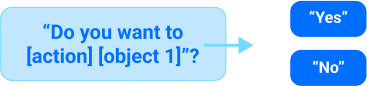

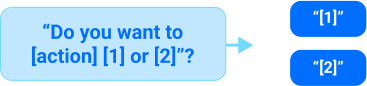

Disambiguation

Bixby provides options that the user can choose from, especially when the items are named with similar-sounding or identical words, or must be chosen from a set of closely-related items such as household lights or coffee shop locations.

Disambiguation Examples

User: "Call Tim"

Bixby: Do you want to call Tim Smith?

User: "Call Tim"

Bixby: Do you want to call Tim Smith or Tim Andrews?

User: "Call Tim"

Bixby: Do you want to call Tim Smith, Tim Andrews, Tim Wong, or hear more?

User: "When is my lunch meeting?"

Bixby: Do you mean the lunch meeting on Thursday or Friday?

Disambiguation Guidelines

User goal: Complete an action after ensuring that Bixby is choosing the right option.

Requirements:

Voice: Disambiguation is how Bixby helps users choose the correct option while staying conversational. The designer and developer can disambiguate in many ways, depending on how many options are available.

If there are two options, it is usually best to use a "this or that" approach:

Do you want to [action] [object 1] or [object 2]?

If the objective is clear, Bixby could just present the two options:

[object 1] or [object 2]?

When there are three or more, Bixby presents items as a list, typically grouping them by threes before moving on to the next set:

Do you want to [action] [object 1], [object 2], [object 3] or hear more?

If Bixby functionality is available to make a guess (similar to a suggestion) as to which option the user wants, perhaps based on previous behavior, then do so.

Do you want to [action] [prediction 1]?

Situational: If a user says "no" to any of the disambiguation options, return to the original prompt. If Bixby doesn't understand what the user is saying, see No Response, below. It is up to the developer to choose how many times Bixby reprompts before ending the conversation.
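The prompt templates in this section can be combined into one helper that picks a phrasing from the number of options left. This is a minimal Python sketch, not a Bixby API: `disambiguation_prompt` and its parameters are hypothetical, and the choice to omit "hear more" when exactly three options remain is an assumption.

```python
def disambiguation_prompt(action, options, start=0, group_size=3):
    """Build a disambiguation question for the options from `start` onward."""
    remaining = options[start:]
    if not remaining:
        raise ValueError("no options left to offer")
    if len(remaining) == 1:
        # A single candidate is phrased as a yes/no check.
        return f"Do you want to {action} {remaining[0]}?"
    if len(remaining) == 2:
        # Two candidates use the "this or that" form.
        return f"Do you want to {action} {remaining[0]} or {remaining[1]}?"
    group = remaining[:group_size]
    if len(remaining) > group_size:
        # More candidates exist beyond this group, so offer to continue.
        return f"Do you want to {action} {', '.join(group)}, or hear more?"
    # Assumption: when the group is the last one, list it without "hear more".
    return f"Do you want to {action} {', '.join(group[:-1])}, or {group[-1]}?"


# The fourth contact is invented to show the "hear more" case.
contacts = ["Tim Smith", "Tim Andrews", "Tim Wong", "Tim Lee"]
question = disambiguation_prompt("call", contacts)
```

If the user answers "hear more", calling the helper again with `start=3` would present the next group.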

No Response

Provides options after a General Reprompt if the user says something that Bixby can't match to an intent.

No Response Examples

User: "Play music"

Bixby: Do you want to use Spotify, TuneIn Radio, or iHeartRadio?

User: [something Bixby doesn't successfully parse]

Bixby: There's Spotify, TuneIn Radio, or iHeartRadio. Which do you want?

User: [something else Bixby doesn't successfully parse]

Bixby: You can say "first one" for Spotify, "second one" for TuneIn Radio, and so on. What would you like?

No Response Guidelines

User goal: Move along with Bixby in the intended direction or topic.

Requirements:

Voice: There are different ways to help a user achieve their goal when Bixby cannot match utterances to intents.

There might be different responses in scenarios where a user stays silent after a question rather than saying something Bixby doesn't understand. The system default is to have Bixby respond with the same question as it would in General Reprompt, but you can supply a variation for cases when a user is silent using the `no-response-message` key in the input view.

If Bixby recognizes a phrase at a certain confidence level (for instance, it is 75% sure what the user said) then Bixby can use partial matching.

Bixby: Do you want to use Spotify, TuneIn Radio, or iHeartRadio?

User: "[something] radio"

Bixby: Did you mean iHeartRadio?

If the confidence level is not available, then Bixby can provide follow-up questions similar to general reprompt, but with more targeted questioning. One way is by repeating the initial prompt with options:

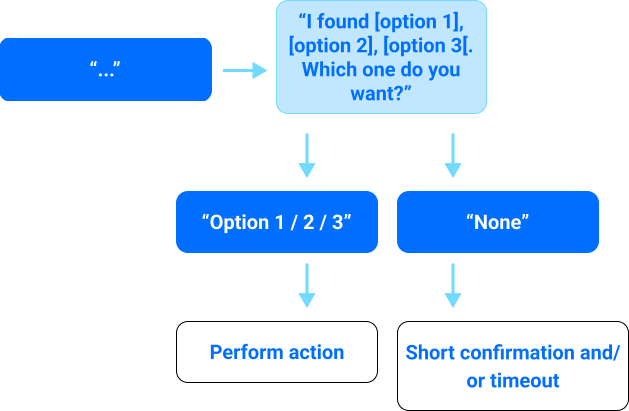

Bixby: I found [option 1], [option 2], [option 3]. Which do you want?

Another way to give reprompts is having Bixby say sample utterances to give the user a clearer direction:

Bixby: You can say "first" for [option 1], "second" for [option 2], and so on. What would you like?

Use the `currentRepromptCount()` Expression Language function with conditionals to provide more explicit guidance on successive reprompts.

Screen: Keep the same options available on the screen with options for a user to exit. If Bixby verbally states other ways of phrasing things, ensure that the options are written the same way on the screen to avoid confusing the user.

Situational: Do not prompt more than three times total (one general reprompt and two after). Once these turns are reached, it is acceptable to end the experience. It is up to the developer and designer to phrase reprompts in a way that does not blame the user, provides examples of what users can say, and gives users opportunities to correct themselves.
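The escalation described in this section (a general reprompt, then the options, then sample utterances, then ending the experience) can be sketched as a function of how many reprompts have already been spoken. The Python below is an illustration only. `reprompt` and `MAX_PROMPTS` are hypothetical names, the zero-based `reprompt_count` argument is an assumption that stands in for a value such as the one `currentRepromptCount()` provides, and the sketch assumes at least two options.

```python
MAX_PROMPTS = 3  # one general reprompt and two after, per the guideline above


def reprompt(reprompt_count, question, options):
    """Return the next reprompt, or None when the experience should end.

    `reprompt_count` is the number of reprompts already spoken
    (assumed to start at 0).
    """
    if reprompt_count >= MAX_PROMPTS:
        return None
    if reprompt_count == 0:
        # General reprompt: acknowledge without blaming, then ask again.
        return f"I didn't catch that. {question}"
    if reprompt_count == 1:
        # Repeat the prompt with the options spelled out.
        return f"I found {', '.join(options)}. Which do you want?"
    # Final attempt: give sample utterances the user can say.
    return (
        f'You can say "first" for {options[0]}, '
        f'"second" for {options[1]}, and so on. What would you like?'
    )


services = ["Spotify", "TuneIn Radio", "iHeartRadio"]
prompts = [reprompt(n, "Where do you want to listen?", services) for n in range(4)]
```

The fourth entry in `prompts` is `None`, which marks the point where ending the conversation is acceptable.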