Training for Natural Language

This documentation applies to version 2.1 of the Training Editor. On older versions of Bixby Developer Studio, selecting training in a subfolder of the resources folder might open the legacy editor. This editor is deprecated, and support for it was removed in Bixby Studio 8.21.1.

If what you're looking at doesn't match the screenshots in this documentation, update to the newest version of Bixby Developer Studio!

Bixby uses natural language (NL) from the user as input. You can improve Bixby's ability to understand NL input by training Bixby on real-world examples of natural language in Bixby Developer Studio (Bixby Studio). For example, in the Quick Start Guide, you train the dice capsule to recognize "roll 2 6-sided dice". This phrase is an utterance. NL training is based on utterances that humans might type or say when interacting with Bixby. Utterances don't have to be grammatical and can include slang or colloquial language.

For any capsule you create, you'll need to add training examples in Bixby Studio for each language supported. If you are planning to support various devices, you need to add training for those devices too. Training examples can also specifically target supported Bixby devices (such as mobile) and specific regions that speak a supported language (for instance, en-US for the United States and en-GB for Great Britain). Bixby converts unstructured natural language into a structured intent, which Bixby uses to create a plan.

In this introduction, you'll learn how to plan and add training. You'll also learn how to search through training entries effectively, and use aligned natural language to test utterances. Finally, you'll learn how to add vocabulary that can assist in training.

For more information on effective training guidelines and best practices, see Training Best Practices.

Training files are not meant to be edited outside of Bixby Studio, and doing so can introduce syntax errors. If Bixby Studio reports an error, try removing the problematic training entry and re-entering it through the training tool.

"Training entries" can also be called "training examples," and you might see either term in Bixby's documentation.

The Training Editor

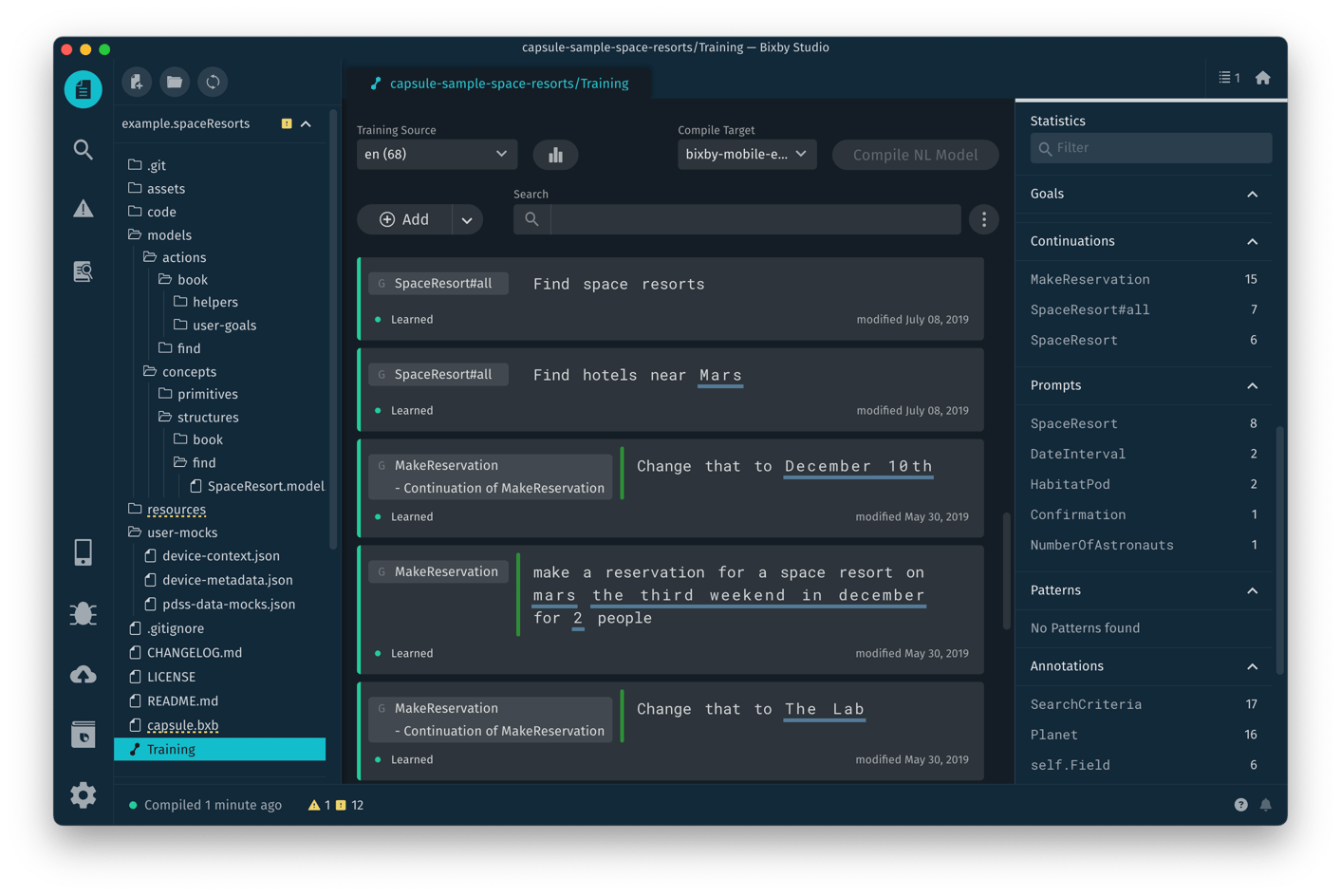

To use the training editor, select Training in the Files sidebar of Bixby Developer Studio. The editor opens to the Training Summary.

When you select the root-level Training item in the sidebar, all training entries will be displayed. If you select a Training folder within the resources folder, the editor will open with the Training Source set to the corresponding resource directory, such as en-US.

Training Summary

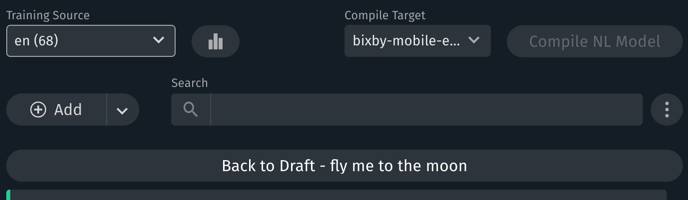

The Training Summary page shows a list of training entries for a given training source. You can add new entries, edit existing entries, and compile a Natural Language model for a specific capsule target. In addition, you can click the Statistics button to the right of the training source drop-down to see how many entries apply to each defined capsule target and the remaining "budget" of training entries within each one. (See Training Limitations for a discussion of budgets.) To the right side of the summary page is the filter sidebar, which shows an overview of the training entries in the selected training source and allows you to quickly filter the summary by status, entry types, and more.

The training source is a resources folder specific to the language and optionally locale and device, such as en, bixby-mobile-en-US, or ko-KR. You can both select existing sources and add new ones with the Training Source menu on this screen. The compile target is the combination of language, locale and device, such as bixby-mobile-en-US.
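As an illustration of how these names are composed, here is a minimal Python sketch that splits a source or target name into its parts. It is not part of Bixby Studio. The `TrainingTarget` type and `parse_target` function are hypothetical, and the sketch assumes that device prefixes always take the form `bixby-<device>`, as in `bixby-mobile-en-US`.

```python
from typing import NamedTuple, Optional


class TrainingTarget(NamedTuple):
    device: Optional[str]    # e.g. "bixby-mobile", or None if not device-specific
    language: str            # e.g. "en"
    region: Optional[str]    # e.g. "US", or None if not locale-specific


def parse_target(name: str) -> TrainingTarget:
    """Split a name such as "bixby-mobile-en-US" into device, language, region."""
    parts = name.split("-")
    device = None
    # Assumption: a device prefix is always "bixby-<device>".
    if parts[0] == "bixby":
        if len(parts) < 3:
            raise ValueError(f"missing language in {name!r}")
        device = "-".join(parts[:2])
        parts = parts[2:]
    if len(parts) not in (1, 2) or not all(parts):
        raise ValueError(f"unrecognized target name: {name!r}")
    language = parts[0]
    region = parts[1] if len(parts) == 2 else None
    return TrainingTarget(device, language, region)


print(parse_target("en"))
print(parse_target("ko-KR"))
print(parse_target("bixby-mobile-en-US"))
```

Read this way, `en` is the broadest of the three names and `bixby-mobile-en-US` the most specific.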

When a training source is selected, the summary page shows the existing training entries for that source. For each training entry, you'll see the following information:

- The utterance the entry is trained on, with its values highlighted. Values correspond to concepts you've modeled within your capsule, such as a reservation date or hotel name.

- The entry's goal. Goals also usually correspond to concepts, such as a completed reservation, but can correspond to an action.

- If applicable, the entry's specialization.

- The entry's training status.

A target can have dozens, hundreds, or even thousands of training entries. You can search and filter the list in two ways:

- Use the search field at the top of the list to show only entries that contain specific text, as well as search filter keywords

- Use the right-hand sidebar to filter by learning status, goals, prompts, patterns, and more

From the Training Entry List, you can add a new training entry, as well as recompile the natural language model.

New Training Entries

After you've modeled your capsule's domain, defining the concepts, the actions, and the action implementations, the training is what pulls it all together. The user's utterance provides the natural language (NL) inputs to Bixby's program, and the goal associated with that utterance tells Bixby what the output of the program needs to be. A training example consists of a sample utterance annotated to connect values to your capsule's concepts and actions. Bixby uses the utterance and annotations to produce an intent.

With good training examples, Bixby will do more than simply memorize the words and their meaning. It will learn the most important aspects of those examples and apply that knowledge to new words and sentences. Natural language training teaches the platform about sentence meaning.

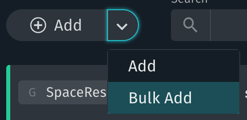

Add new training from the Training Summary screen by clicking the Add button. (The drop-down arrow on the button lets you select Bulk Add for adding multiple entries at once, and switches the button between single entry and multiple entry modes.)

The Add button will create an entry in the currently selected training source. Make sure you have selected the correct source folder to add a new training entry in before clicking Add.

When adding training entries, you should select the most widely applicable training source for the entry you wish to create. For example, if the utterance is in English and will not be either locale or device dependent, select en.

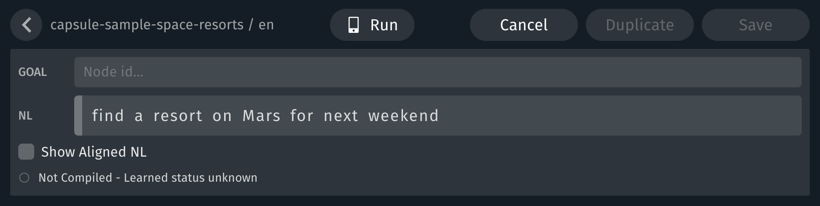

You will first be prompted to add the utterance you wish to train on. An utterance is a phrase or sentence, appropriate to the training example's language and locale, that directs Bixby toward the training example's goal:

- "Find me a Hawaiian restaurant"

- "Book a table for 4 on Saturday at 7pm"

- "Find a resort on Mars for next weekend"

After entering the utterance, click Annotate (or press Ctrl/Cmd + Enter). To annotate the utterance, you'll need to do the following:

- Set a goal for the utterance

- Identify values in the example's utterance

- Verify the plan that Bixby creates for the example

Let's look at each step in more detail.

Set a Goal

Goals are usually concepts. If your capsule includes a Weather concept that contains all the information for a weather report, then when the user asks "what's the weather", the goal is the Weather concept.

Sometimes, though, goals might be actions. This usually happens when the user is purchasing a product or a service in a transaction. For example, a capsule that books airline flights would have training examples that use a BookFlight action as a goal.

Concepts and actions used as goals in Natural Language training must always belong to the capsule that declares them. A concept or action declared in an imported library capsule cannot be used as a goal in the importing capsule. However, you can extend a concept in your importing capsule, and use the child concept as a goal.

Identify Values

To reach a goal, Bixby often needs input values: the restaurant, date, and time of a dinner reservation; the location and day for a weather report. The user's utterances often contain some of the necessary values:

- "Find me a Hawaiian restaurant"

- "Book a table for 4 on Saturday at 7pm"

- "Find a resort on Mars for next weekend"

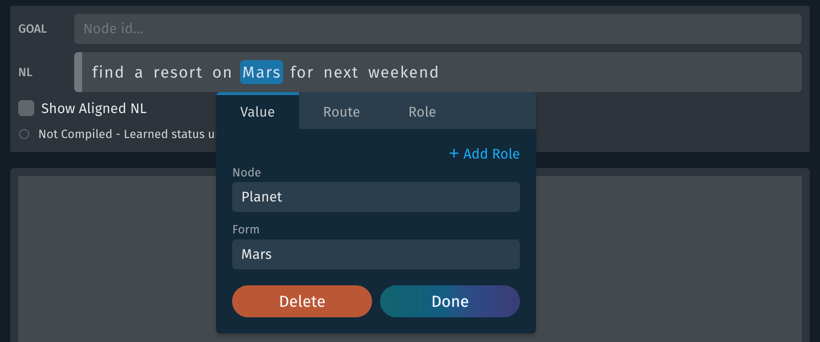

To help Bixby identify values in an utterance, you annotate the values, matching them to concepts in your capsule or in Bixby library capsules.

- "Hawaiian" is example.food.CuisineStyle

- "4" is example.reservation.Size

- "Saturday at 7pm" is viv.time.DateTimeExpression

- "next weekend" is viv.time.DateTimeExpression

- "Mars" is example.spaceResorts.Planet

To annotate a value in an utterance, click or highlight the value in the utterance and click the Value tab in the menu that appears. Then, enter the concept this value is associated with in the Node field. For enumerated values, you'll also need to enter a valid symbol in the Form field; if the value is "extra large" and this matches a Size of ExtraLarge, the Form should be ExtraLarge. The autocompletion for the Node field will provide a list of valid forms delimited by a colon, such as Size:ExtraLarge or, in the following example, Planet:Mars.

Enumerated values must either match symbol names exactly, or be an exact match to vocabulary for that symbol.
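The exact-match rule can be pictured with a small Python sketch. This is only an illustration of the rule as stated, not Bixby's matching code. The `resolve_form` function and the `SIZE_VOCAB` table are hypothetical, and the sketch compares strings literally, so it says nothing about how Bixby treats case or whitespace.

```python
from typing import Dict, List, Optional

# Hypothetical symbols and vocabulary for an enumerated Size concept.
SIZE_VOCAB: Dict[str, List[str]] = {
    "Small": ["small"],
    "Medium": ["medium"],
    "Large": ["large"],
    "ExtraLarge": ["extra large"],
}


def resolve_form(text: str, vocab: Dict[str, List[str]]) -> Optional[str]:
    """Return the symbol whose name or vocabulary exactly equals text, else None."""
    for symbol, phrases in vocab.items():
        if text == symbol or text in phrases:
            return symbol
    return None


print(resolve_form("extra large", SIZE_VOCAB))  # matches vocabulary for ExtraLarge
print(resolve_form("ExtraLarge", SIZE_VOCAB))   # matches the symbol name itself
print(resolve_form("huge", SIZE_VOCAB))         # no exact match
```

In this sketch, a value like "huge" resolves to nothing until a matching vocabulary phrase is added for one of the symbols.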

The values you annotate in an utterance should match primitive concepts, not structure concepts. If you need a structure concept as an input for an action, create a Constructor that takes the primitive concepts that match the properties of the structure concept as inputs, and outputs the structure concept. See the Constructor documentation for an example.

You don't need to explicitly tell Bixby to use the constructor action; the planner uses the action in the execution graph when it needs to construct a concept.

If a goal is common to multiple targets, but has different parameter input values, do not try to handle the differentiation between targets using training or modeling. Instead, have your action implementation catch this as a dynamic error.

In addition to annotating utterances with values, you can also annotate them with routes. Read the Routes section for information on when you might use routes (and when you shouldn't).

You can also use keyboard shortcuts for annotating values and routes: use Opt+V and Opt+R respectively on a Mac, and Alt+V and Alt+R respectively on Windows or Linux.

What about sort signals? Earlier versions of Bixby supported annotating sorting utterances, like "sort by price" or "show me the nearest hotels", with sort signals. This has been deprecated. Instead, use sort-orderings for including sorting functionality in concepts, or the sort block for sorting output from actions.

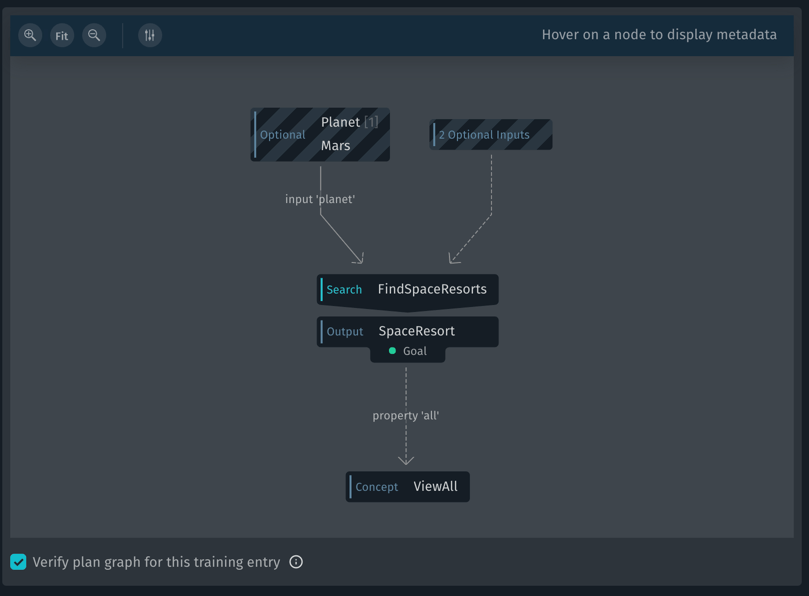

Check the Plan

Once you have identified all the values in the utterance, confirm that the plan looks like it should. Here's the plan for "Find hotels near Mars" from the Space Resorts capsule:

This is a simple plan, where the inputs feed into actions that resolve the goal. Make sure the goal is correct and the inputs have the right types. If you aren't sure, go ahead and run it in the device simulator by clicking the Run on Simulator button. If anything in the plan looks odd, executing it often exposes the problem.

Draft Entries

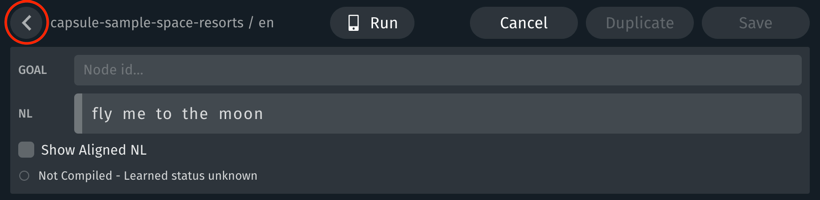

When you are creating a new entry, clicking the back arrow in the upper left corner will return you to the summary screen, but save your work as a draft.

When a draft is saved, it will appear as an option on the summary screen.

Clicking the Cancel button will return to the summary screen without saving the new entry as a draft, and will discard any existing draft.

You can only have one draft saved at a time. If you start a new entry while a draft is saved, Bixby Studio warns you that this action will discard the existing draft and replace it.

Changes to existing entries, rather than new entries, will not be saved as drafts.

Duplicate Entries

You can duplicate an existing training entry by clicking on it to open the editing window and clicking Duplicate. This will save any existing changes in the entry and create an exact copy in the current target. Note that the Duplicate button only works when the entry is in a state where it can be saved.

To copy or move one or more entries between targets, locales, or languages, it's faster to use the Copy or Move Batch Actions.

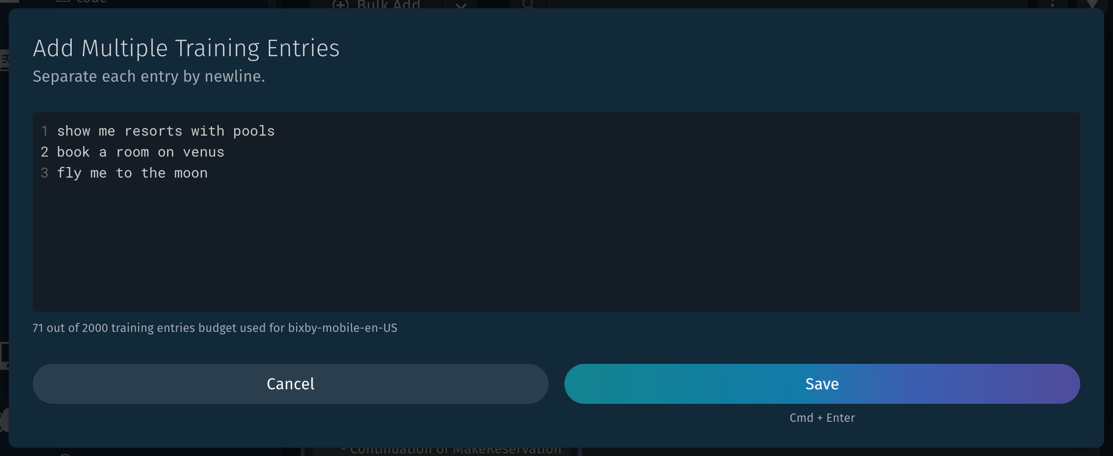

Bulk Add

Clicking the drop-down arrow on the Add button will allow you to select Bulk Add to add more than one training entry at a time.

This opens the Multiple Training Entries dialog. You can enter multiple entries here, separated by newlines. If you use Aligned NL in an entry, it will be created with the given annotations.

New entries created without Aligned NL, or created with invalid Aligned NL, will have compilation errors. In either case, they will appear in the Issues section of the Statistics sidebar, and you can quickly find them by clicking Compilation Errors in that section.
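If you have many similar utterances, you could generate the newline-separated text outside the editor and paste it into the dialog. The following Python sketch shows one way to do that. The `aligned_entry` helper and the templates are hypothetical, the concept names are taken from the Space Resorts examples in this guide, and the sketch assumes the goal is written as the plain concept name.

```python
GOAL = "example.spaceResorts.SpaceResort"
PLANET = "example.spaceResorts.Planet"


def aligned_entry(template: str, planet: str) -> str:
    """Build one Aligned NL line with a goal and an annotated Planet value."""
    value = f"({planet})[v:{PLANET}:{planet}]"
    return f"[g:{GOAL}] " + template.format(planet=value)


# Hypothetical utterance templates; {planet} marks where the value goes.
templates = ["find a resort on {planet}", "research space resorts on {planet}"]
planets = ["Mars", "Mercury"]

# One entry per line, as the Multiple Training Entries dialog expects.
bulk_text = "\n".join(aligned_entry(t, p) for t in templates for p in planets)
print(bulk_text)
```

Review the generated entries in the editor after adding them, since any line with invalid Aligned NL will show up as a compilation error.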

The Add button will remain in the last mode selected in its drop-down: after Bulk Add is selected, it becomes a Bulk Add button. Use the drop-down to change it back to the Add mode.

Specializations

Some utterances require more information in your training example for Bixby to process them correctly. A user might say something that requires the context of the previous utterance, or Bixby might need to prompt the user for information needed to complete the initial request. These cases are handled by specializations.

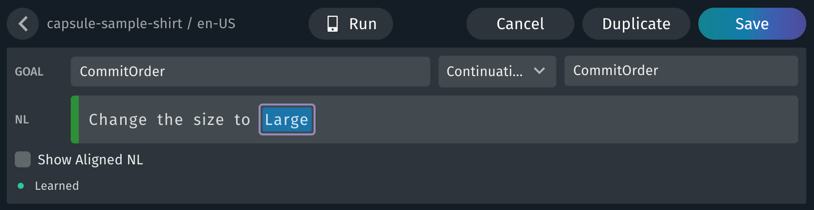

Continuation Of

After a user says something like "buy a medium shirt", they could follow it up with "change the size to large". When a user follows up their previous utterance with a related request or refinement, this is a continuation. A continuation is an utterance that only makes sense in the context of the previous utterance.

A continuation needs to specify two nodes:

- The goal the continuation should reach. This is specified in the "Goal" field, just like any other training example.

- The goal of the utterance the continuation is refining. This specifies what this example is a "continuation of".

To train "change the size to large" in the shirt store sample capsule, you specify the following:

- The goal of this training example is the CommitOrder action.

- The utterance is a continuation of a previous utterance whose goal is also the CommitOrder action, so we specify CommitOrder again in the "Continuation of" field.

The program generated by the previous utterance doesn't have to reach its conclusion before the continuation is acted upon. In the Shirt capsule example, the user is presented with a confirmation view before the CommitOrder action finishes executing, and that's when the user might change the order's details with a continuation utterance. In other capsules, the first utterance's execution might have fully completed before a continuation. For example, a weather capsule could support the utterance "give me the weather in London" followed by the continuation "what about Paris".

Continuations are a common use case for routes; in the previous example, the Flags list for this training entry shows a route through the UpdateOrder action, which modifies the existing saved order. Read about Routes for more information (and alternatives to using routes). In addition, "Large" has been given a Role of ChangedItem; read about Adding Context with Roles for more information.

You should only train utterances as continuations when they don't make sense on their own. A continuation must have a direct, clear relation to a previous utterance.

For more examples, see the Continuation for Training sample capsule.

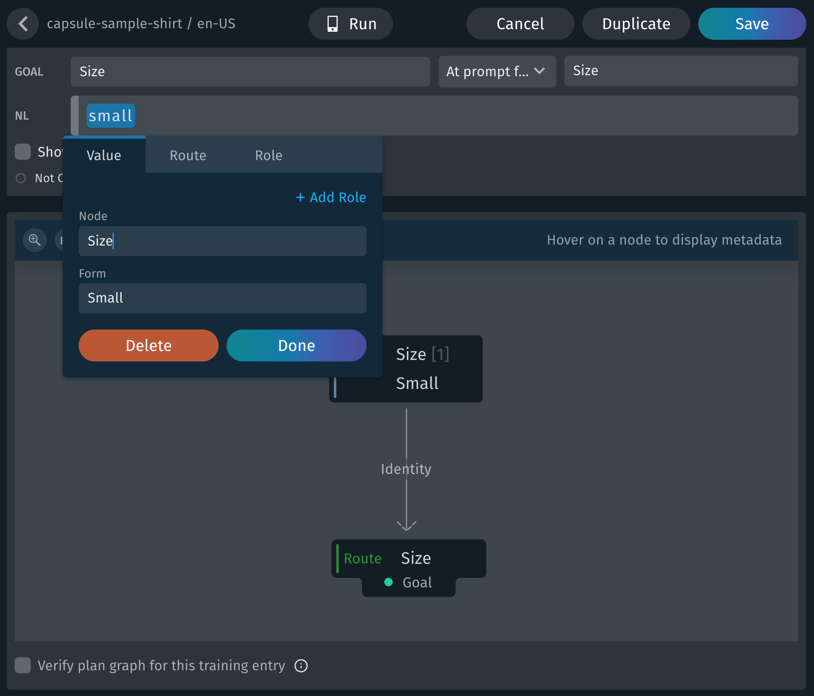

At Prompt For

When there isn't enough information to reach a goal, Bixby prompts the user for further information. For example, suppose in the previous Shirt example, instead of "change the size to large" the user had only said "change the size". Bixby needs to know what to change the size to, and asks the user for this information. As the developer, you can account for these prompts by adding examples of how the user would respond to a prompt.

To make a training example for a prompt, add an example of a response to a prompt. Click Add, enter small in the training example field, and click Annotate. This is a response to a prompt for example.shirt.Size, so enter Size as the goal. From the No specialization menu, choose At prompt for:

In this case, Size is both the goal of this training example and the specialization node. The text small in the utterance is annotated as the node Size and the form Small.

Pattern For

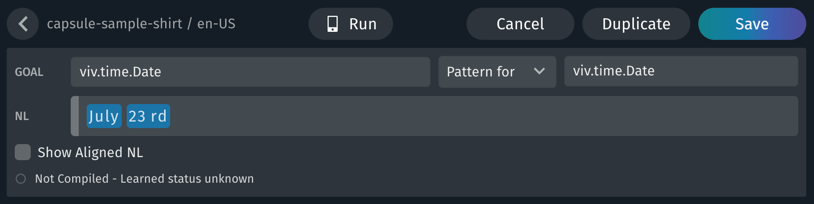

Patterns provide vocabulary for structured concepts. They are not real training examples, but are rather treated like vocabulary entries in a training-like format.

When you add training, you must ensure that all training entries that are patterns are learned. Otherwise, submission will fail!

Consider DateTime training. The viv.time DateTime library capsule uses patterns so that developers who import it only have to train one main type: viv.time.DateTimeExpression. Inside viv.time, training consists of patterns that map to the DateTimeExpression concept. The patterns are all variations of ways people could refer to DateTime objects. Patterns do not use machine learning, but are simpler templates whose pieces are matched explicitly.

Consider these training examples:

Example 1:

NL Utterance: "2:30 p.m. this Friday"

Aligned NL:

[g:DateTime:pattern] (2)[v:Hour]:(30)[v:Minute] (p.m.)[v:AmPm:Pm] (this)[v:ExplicitOffsetFromNow:This] (friday)[v:DayOfWeek:Friday]

The pattern being matched here is:

[hour]:[minute] [AM or PM] this [day of week]

Example 2:

NL Utterance: "July 23rd"

Aligned NL:

[g:viv.time.Date:pattern] (July)[v:viv.time.MonthName:July] (23rd)[v:viv.time.Day:23]

This matches a simpler pattern:

[month] [day]

Here is the training entry in the editor for Example 2:

Note that both goals in this entry (the goal on the left and the Specialization Node goal on the right) are the same: viv.time.Date.

The pattern in Example 2 will not match an utterance of "the 23rd of July"; that will need to be matched by a different pattern. Multiple patterns can exist for the same goal.
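To see why word order matters, it can help to think of a pattern as a fixed template. The Python sketch below is only an analogy using a regular expression. It is not how Bixby implements patterns, and `match_month_day` is a hypothetical helper.

```python
import re
from typing import Dict, Optional, Union

MONTHS = [
    "January", "February", "March", "April", "May", "June",
    "July", "August", "September", "October", "November", "December",
]

# Template for "[month] [day]": a month name, one space, a day with optional suffix.
MONTH_DAY = re.compile(
    r"(?P<month>" + "|".join(MONTHS) + r") (?P<day>\d{1,2})(?:st|nd|rd|th)?"
)


def match_month_day(utterance: str) -> Optional[Dict[str, Union[str, int]]]:
    """Return the matched pieces if the whole utterance fits the template."""
    m = MONTH_DAY.fullmatch(utterance)
    if m is None:
        return None
    return {"MonthName": m.group("month"), "Day": int(m.group("day"))}


print(match_month_day("July 23rd"))         # fits "[month] [day]"
print(match_month_day("the 23rd of July"))  # different word order, no match
```

Supporting "the 23rd of July" in this analogy would mean adding a second template, just as a second pattern is needed in training.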

Another feature of patterns that's distinct from normal training is that patterns can be reused in other training examples, and even in other patterns. The previous date patterns could be included in a pattern for DateInterval, allowing Bixby to understand ranges like "January 1st through January 7th".

All these different DateTime intent signatures should be interchangeable, for the most part, without having to train all of the possible combinations of DateTime inputs in your capsule.

Patterns are designed for matching language elements that are regular and structured; if an utterance exactly matches a pattern, Bixby can always understand it. Regular training entries allow for less regular speech patterns and variations to be understood through machine learning, where it's not practical to define every possible variation.

You might need to train a few varying lengths of phrases pertaining to the structure concept (in this example, DateTime) to ensure that they get matched.

Remember that if your capsule needs to handle dates and times, you do not need to actually create patterns for them! Instead, use the DateTimeExpression concept from the DateTime library.

For more advanced topics relating to capsule training, read Advanced Training Topics.

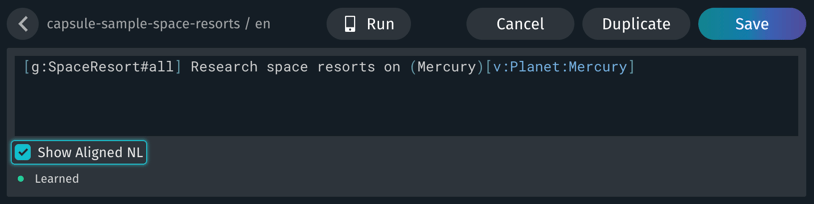

Aligned NL

You've likely already seen examples of Aligned NL, a plain text representation of utterances that includes the annotations for goals, values, and routes. When you annotate an utterance in the training editor, Bixby creates Aligned NL. Bixby also generates Aligned NL when processing a real query. If you're adding training entries (singly or in bulk), you can also use Aligned NL. The training editor supports autocompletion of Aligned NL.

You can use Aligned NL in the Device Simulator to give Bixby queries, even when you haven't compiled the current NL model. The training editor can switch between displaying and editing annotated NL and the Aligned NL for utterances by toggling the Show Aligned NL checkbox:

Here are some other examples of Aligned NL utterances:

[g:viv.twc.Weather] weather on (saturday)[v:viv.time.DateTimeExpression] in (san jose)[v:viv.geo.LocalityName]

[g:flightBooking.TripOption] I need a flight from {[g:air.DepartureAirport] (sjc)[v:air.AirportCodeIATA:SJC]} to (lax)[v:air.AirportCodeIATA:LAX] (tonight)[v:time.DateTimeInterval]

Annotations are denoted in brackets. The first character indicates a signal, the kind of annotation, followed by a colon and the concept the annotation refers to. The words or phrases a value annotation applies to are grouped in parentheses immediately before it, like "(san jose)" in the first example. Subplans are enclosed in braces. There are four kinds of annotation:

- g: goal signal

- v: value signal

- r: route signal

- f: flag signal
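To make the notation concrete, here is a minimal Python sketch that pulls the annotations out of an Aligned NL string. It is an illustration based only on the syntax described here, not Bixby's parser. The `parse_aligned_nl` function is hypothetical, it ignores subplan nesting, and it keeps whatever follows the node name (a form such as SJC, or a qualifier such as pattern) as raw text.

```python
import re
from typing import Dict, List

SIGNAL_NAMES = {"g": "goal", "v": "value", "r": "route", "f": "flag"}

# An optional "(phrase)" directly followed by "[signal:node...]".
ANNOTATION = re.compile(
    r"(?:\((?P<phrase>[^)]*)\))?\[(?P<signal>[gvrf]):(?P<body>[^\]]*)\]"
)


def parse_aligned_nl(text: str) -> List[Dict[str, str]]:
    """Return one dict per bracketed annotation, in order of appearance."""
    annotations = []
    for m in ANNOTATION.finditer(text):
        # Split "Node:rest"; "rest" is empty when only a node is given.
        node, _, rest = m.group("body").partition(":")
        annotations.append({
            "signal": SIGNAL_NAMES[m.group("signal")],
            "node": node,
            "form": rest,
            "phrase": m.group("phrase") or "",
        })
    return annotations


example = (
    "[g:viv.twc.Weather] weather on (saturday)[v:viv.time.DateTimeExpression] "
    "in (san jose)[v:viv.geo.LocalityName]"
)
for annotation in parse_aligned_nl(example):
    print(annotation)
```

For the weather example, this yields one goal annotation followed by two value annotations, with "saturday" and "san jose" as the annotated phrases.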

To process a request, Bixby translates Aligned NL into an intent. You can see the intent for a request in the Debug Console. The intent for a "research space resorts on Mercury" request is as follows:

intent {
  goal {
    1.0.0-example.spaceResorts.SpaceResort#all
    @context (Outer)
  }
  value {
    1.0.0-example.spaceResorts.Planet (Mercury)
  }
}

You can enter Aligned NL rather than an utterance in the new training example field.

After a training entry is saved, you cannot edit its NL representation, but you can edit the Aligned NL.